Making Cancer Imaging Smarter: Emerging Techniques and Computational Outlook to Guide Precision Diagnostics

Abstract

Medical imaging plays an indispensable role across the entire cancer care continuum, from screening and early detection to diagnosis, staging, treatment planning, and monitoring. While conventional imaging modalities such as CT, MRI, and PET delineate tumors anatomically and functionally, computational analysis approaches are beginning to extract quantitative imaging biomarkers that carry information beyond qualitative visual evaluation. The promise of artificial intelligence (AI) lies in uncovering clinically actionable patterns embedded within large collections of medical images, thereby enabling more accurate and personalized cancer management. This review examines the emerging role of AI, specifically deep learning approaches such as convolutional neural networks (CNNs), U-Nets, and generative adversarial networks (GANs), in cancer imaging applications spanning the diagnostic, prognostic, and therapeutic domains. Both established and cutting-edge techniques are reviewed with a view to their effective integration into clinical practice. An overview of the conventional imaging modalities that currently represent the standard of care for oncologic diagnosis and treatment planning is provided first, highlighting CT, MRI, PET, and ultrasound imaging. Advanced computational approaches that leverage AI and deep learning for automated analysis of medical images are then reviewed in depth, including key techniques such as radiomics, tumor segmentation, and predictive modeling. Emerging studies show the potential of AI-powered imaging analytics to discern subtle phenotypic patterns, quantify tumor morphology, and integrate imaging findings with genomic data for precision cancer management. However, thoughtful validation is indispensable before clinical integration.
Nascent deep learning techniques offer tremendous promise to uncover previously inaccessible insights from medical imaging big data that can guide individualized cancer diagnosis, prognosis, and treatment planning. However, careful translation of these powerful technologies by multidisciplinary teams of clinicians, imagers, and data scientists focused on evidence-based improvements in patient care is crucial to realize their full potential.

1. INTRODUCTION

Medical imaging techniques such as magnetic resonance imaging (MRI), computed tomography (CT), ultrasound, and digital pathology are indispensable in modern healthcare. They play crucial roles in screening, diagnosis, treatment planning, and prognosis assessment. The surge in medical image data underscores the need for advanced analysis methods to derive clinically valuable insights [1]. Key clinical applications where medical image analysis is pivotal include oncology, neurology, cardiology, digital pathology, ophthalmology, and radiotherapy planning [2]. Advanced imaging modalities, including MRI, positron emission tomography (PET), and CT, are essential for early disease detection, personalized medicine, image-guided interventions, and treatment monitoring. PET imaging with radiotracers such as fluorodeoxyglucose (FDG), which reflects tumor glucose metabolism, and newer agents such as prostate-specific membrane antigen (PSMA) ligands for prostate cancer is crucial for accurate staging, treatment planning, and response assessment. Integrated PET/MRI combines MRI's superior soft tissue contrast with PET's functional data, with applications in neurology and oncology. MRI remains a cornerstone of neuroimaging and musculoskeletal diagnostics because of its excellent soft tissue differentiation and absence of ionizing radiation. Ultrahigh-field MRI (7T and above) offers improved spatial resolution and image quality, enabling detailed visualization of anatomical structures.

Image-guided interventions using CT, MRI, or ultrasound facilitate precise therapy delivery to target tissues while sparing surrounding healthy structures. Techniques such as cryoablation, radiofrequency ablation, high-intensity focused ultrasound (HIFU), irreversible electroporation, and therapeutic radiation increasingly rely on image guidance [3]. Radiomics extracts quantitative imaging biomarkers from CT and MRI to predict prognosis or treatment response [4]. Digital pathology uses image analysis to screen histopathology slides for cancer metastases, with some deep learning algorithms matching or, in certain studies, exceeding pathologist-level accuracy [3]. In radiotherapy treatment planning, image analysis is vital for segmenting target volumes and organs at risk, and adaptive planning accommodates anatomical changes during therapy [5]. Machine learning and artificial intelligence are increasingly applied in medical imaging for tasks such as image reconstruction, automated quality control, computer-aided detection and diagnosis, radiation therapy planning, and predictive analytics. The rapid evolution of medical imaging technology aims to enhance diagnostic confidence, enable personalized medicine, and provide minimally invasive targeted therapies [1]. Progress in machine learning, coupled with regulatory approvals, is solidifying the role of medical image analysis in advancing healthcare.

Despite these advancements, current imaging techniques face several limitations and challenges. MRI, while offering excellent soft tissue contrast, can be expensive and time-consuming. CT scans, although fast and widely available, expose patients to ionizing radiation. Ultrasound is operator dependent and may provide limited resolution in certain contexts. Digital pathology, though promising, requires significant computational resources and data storage. Emerging developments in medical imaging, particularly the integration of machine learning and artificial intelligence, promise to enhance diagnostic performance. These technologies are being harnessed to develop novel imaging biomarkers, predictive models, and decision support systems aimed at precision diagnosis and prognosis assessment in oncology. However, the implementation of AI in clinical practice requires robust validation, regulatory approval, and integration with existing healthcare systems. Ethical considerations, such as data privacy and algorithm transparency, also pose significant challenges.

The present review aims to provide a comprehensive overview of the diverse medical imaging techniques currently employed in oncologic diagnosis, emphasizing their respective advantages and limitations. It also explores emerging innovations in medical imaging that use AI and machine learning, examining the expanding role of computational analytics and deep learning in creating novel imaging biomarkers and predictive models for precision diagnosis and prognosis assessment of cancer. The goal is to synthesize the state of the art in cancer imaging, offering a holistic perspective that encompasses both traditional modalities and cutting-edge techniques. This includes addressing key challenges and opportunities that warrant further research to translate enhanced imaging methods into clinical practice, and highlighting areas where further investigation is needed to overcome current limitations and fully realize the potential of advanced imaging technologies in healthcare.

In summary, while medical imaging has transformed healthcare, ongoing research and innovation are essential to address current challenges and limitations. The integration of AI and machine learning holds promise for enhancing diagnostic accuracy and efficiency, paving the way for more personalized and effective patient care. However, careful consideration of ethical, regulatory, and practical aspects is crucial to ensure these technologies are implemented safely and effectively in clinical settings. Continued collaboration between researchers, clinicians, and industry stakeholders will be vital in driving the future of medical imaging forward.

2. MEDICAL IMAGING AND CANCER

Medical imaging techniques, including X-ray, CT, MRI, and PET scans, allow visualization of internal anatomy and are critical for detecting, diagnosing, and staging cancer [6] (Fig. 1). However, interpreting these complex scans thoroughly can be challenging, even for expert radiologists and pathologists. Tumors, especially when small or at early stages, may be overlooked in complex imaging studies that involve multiple structures [3]. This is a key area where medical image analysis has become invaluable, using computer software to process images and assist in cancer detection and evaluation [1]. Image analysis techniques can process patient scans to highlight suspicious regions that may contain malignant lesions for closer inspection [4]. This computer assistance acts as a second reader that can flag potential tumors a clinician may initially miss, improving sensitivity. Image analysis can also assess characteristics of detected tumors, such as shape, margins, texture patterns, and blood flow kinetics, through quantitative feature extraction [1]. This aids in determining whether a mass is likely malignant or benign. Beyond the primary tumor, image analysis enables efficient review of gigapixel digitized pathology slides to identify metastatic lesions [3]. For diagnosed cancers, image analysis can delineate tumor boundaries, measure volume, and track changes over the course of treatment. Automated image segmentation and registration allow precise mapping of the tumor region to be irradiated in radiotherapy planning [6].

Fig. 1. Common types of medical imaging systems used in cancer diagnosis.

Imaging approaches for specific types of cancer are summarized below:

• For breast cancer screening and diagnosis, mammography remains the mainstay modality, though breast MRI is increasingly used as an adjunct in high-risk women and to assess the extent of disease. Image-guided (for example, stereotactic) biopsy is integral to diagnosis, and breast MRI has been reported to detect additional malignant lesions in 20-40% of women with newly diagnosed breast cancer.

• In lung cancer, low-dose CT is the recommended screening test for high-risk individuals; in the landmark National Lung Screening Trial (NLST), it reduced lung cancer mortality by 20% relative to chest radiography. PET/CT is the standard for staging and radiotherapy planning, while endobronchial ultrasound enables minimally invasive lymph node sampling.

• MRI and transrectal ultrasound (TRUS) are used to diagnose and stage prostate cancer. Multiparametric MRI incorporating T2-weighted, diffusion-weighted, and dynamic contrast-enhanced sequences achieves a reported sensitivity of 80-90% for detecting clinically significant prostate lesions. MRI-TRUS fusion targeted biopsy using co-registered images significantly improves prostate cancer detection compared with standard systematic biopsy.

• For gastrointestinal cancers, CT enterography and MR enterography assess small bowel lesions with high accuracy. Endoscopic ultrasound is also playing an increasing role in upper GI cancer staging. PET/CT is routinely used for staging, treatment response evaluation, and surveillance in several GI malignancies.

• In gynecologic cancers, MRI has become integral in cervical cancer for assessing tumor size, parametrial invasion, and nodal metastases, with a reported accuracy of 80-95%. For ovarian cancer, transvaginal ultrasound is the first-line modality for evaluating adnexal masses, pelvic MRI helps characterize sonographically indeterminate masses, and contrast-enhanced CT is generally used for staging.

In particular, functional imaging is redefining paradigms in cancer management. Diffusion-weighted MRI offers added value for lesion detection and characterization in numerous cancer types. PET imaging with novel tracers such as PSMA ligands and FAPI analogues is improving staging accuracy in prostate and breast cancers, respectively. Overall, medical image analysis enhances cancer diagnosis by aiding detection, reducing oversight errors in interpretation, unlocking quantitative insights, and standardizing evaluation. Recent artificial intelligence advances such as deep learning are poised to make image analysis an integral part of the clinical workflow, helping clinicians provide more accurate diagnoses and personalized care [2].

The following sections cover an in-depth overview of established and emerging medical imaging techniques utilized in cancer research and clinical practice, highlighting their key capabilities, clinical applications, and latest innovative developments.

2.1. MRI for Cancer Diagnosis

Magnetic resonance imaging (MRI) continues to evolve as an essential tool for cancer imaging, providing superb soft tissue contrast and functional information unparalleled by other modalities. Recent technical advances have further expanded the capabilities of MRI for characterizing tumor morphology, stage, and biology.

In brief, MRI uses strong magnetic fields and radio waves to generate detailed images of the body's anatomy and physiology. The key components of an MRI scanner include a large magnet, gradient coils, radiofrequency (RF) coils, and a computer system. The main magnet aligns the spins of hydrogen protons in the body, the gradient coils vary the magnetic field to spatially localize signals, and the RF coils transmit and receive radio pulses whose signals are reconstructed into images by the computer. To acquire an image, the patient is positioned inside the scanner bore, and RF pulses are delivered at the proton resonant (Larmor) frequency, tipping the protons' net magnetization away from its alignment with the main field. When the pulse stops, the protons relax back toward equilibrium, emitting signal that is detected by the RF coils. Differences between tissues in relaxation times (T1 and T2) and proton density provide image contrast. MRI provides excellent soft tissue contrast and is used to image organs, tumors, joints, and more without ionizing radiation. Advances continue to accelerate scanning and enhance image quality.
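To make the relaxation-based contrast mechanism concrete, the sketch below evaluates the textbook simplified spin-echo signal equation, S = PD * (1 - exp(-TR/T1)) * exp(-TE/T2), for two tissues. The T1/T2 values and TR/TE settings are illustrative assumptions rather than measured constants for any particular tumor, and the model ignores real-world effects such as field inhomogeneity and coil sensitivity.

```python
import math

def spin_echo_signal(pd, t1_ms, t2_ms, tr_ms, te_ms):
    """Simplified spin-echo signal: S = PD * (1 - exp(-TR/T1)) * exp(-TE/T2)."""
    return pd * (1.0 - math.exp(-tr_ms / t1_ms)) * math.exp(-te_ms / t2_ms)

# Hypothetical tissue parameters chosen for illustration only (not measured values).
lesion = {"pd": 1.0, "t1_ms": 800.0, "t2_ms": 60.0}
background = {"pd": 1.0, "t1_ms": 500.0, "t2_ms": 40.0}

# Common weighting strategy: short TR/TE for T1 weighting, long TR/TE for T2 weighting.
protocols = {"T1-weighted": (500.0, 15.0), "T2-weighted": (4000.0, 100.0)}

contrast = {}
for name, (tr, te) in protocols.items():
    s_lesion = spin_echo_signal(tr_ms=tr, te_ms=te, **lesion)
    s_background = spin_echo_signal(tr_ms=tr, te_ms=te, **background)
    contrast[name] = s_lesion - s_background  # positive: lesion brighter than background
    print(f"{name}: lesion={s_lesion:.3f}, background={s_background:.3f}")
```

With these assumed values, the longer-T1, longer-T2 lesion appears darker than background on the T1-weighted setting and brighter on the T2-weighted setting, which is why the same tissue can look very different across sequences.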

2.1.1. Recent Advances in Magnetic Resonance Imaging for Oncologic Applications

Novel quantitative techniques, including diffusion kurtosis imaging (DKI) and intravoxel incoherent motion (IVIM), are being explored to provide additional microstructural and perfusion information beyond standard diffusion-weighted and perfusion imaging. Early DKI and IVIM studies indicate that they can discern subtle changes in tissue microenvironments and measure vascularity in ways that may improve diagnostic accuracy and treatment response monitoring for certain cancers [7, 8]. Developments in molecular MRI are also on the horizon, with new contrast mechanisms such as chemical exchange saturation transfer (CEST) and hyperpolarized xenon-129 now under investigation for cancer imaging [9, 10]. CEST provides information about tumor pH and metabolism by detecting exchangeable proton groups, while xenon-129 MRI is being explored as a sensitive probe of the hypoxic tumor microenvironment. These emerging molecular MRI approaches may eventually allow non-invasive imaging of cancer biomarkers and hallmarks that are currently assessable only through biopsy and histopathology.
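As a rough illustration of how IVIM separates perfusion from diffusion, the sketch below uses the standard bi-exponential IVIM signal model and a simple segmented fit: at high b-values the fast pseudo-diffusion term is assumed negligible, so a log-linear fit yields the diffusion coefficient D, and its intercept yields the perfusion fraction f. The b-values, parameter values, and the 200 s/mm² threshold are assumptions for a noise-free synthetic example; clinical IVIM fitting must cope with noise and often uses non-linear or Bayesian estimators instead.

```python
import numpy as np

def ivim_signal(b, s0, f, d, d_star):
    """Bi-exponential IVIM model: S(b) = S0 * [f*exp(-b*D*) + (1-f)*exp(-b*D)]."""
    return s0 * (f * np.exp(-b * d_star) + (1.0 - f) * np.exp(-b * d))

def segmented_ivim_fit(b, s, s0, b_threshold=200.0):
    """Estimate D and f with a two-step (segmented) fit.

    Above b_threshold the pseudo-diffusion term is assumed negligible, so
    ln S(b) is approximately ln[(1 - f) * S0] - b * D, a straight line in b.
    """
    high = b >= b_threshold
    slope, intercept = np.polyfit(b[high], np.log(s[high]), 1)
    d = -slope
    f = 1.0 - np.exp(intercept) / s0
    return d, f

# Synthetic, noise-free example with assumed parameters (b in s/mm^2, D in mm^2/s).
b_values = np.array([0, 10, 20, 50, 100, 200, 400, 600, 800, 1000], dtype=float)
signal = ivim_signal(b_values, s0=1000.0, f=0.10, d=1.0e-3, d_star=2.0e-2)
d_hat, f_hat = segmented_ivim_fit(b_values, signal, s0=signal[0])
print(f"D ~ {d_hat:.2e} mm^2/s, f ~ {f_hat:.3f}")
```

The segmented approach trades some bias (the pseudo-diffusion term is not exactly zero at b = 200) for robustness, which is one reason it is popular when data are noisy.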

In parallel, radiomics and radiogenomics research has opened new avenues for converting standard and quantitative MRI data into imaging biomarkers through high-throughput feature extraction and data-mining [11, 12]. By revealing MRI-based signatures associated with tumor genotype and phenotype, these quantitative imaging methods are bringing MRI closer to personalized medicine applications. While MRI is continuously evolving as a cancer imaging tool, challenges and open questions remain. Larger comparative effectiveness trials are still needed to define how new multi-parametric MRI techniques can optimally be integrated into clinical workflows. Continued exploration of how MRI can be combined with other modalities like PET and US for hybrid imaging is another active area of research [13, 14]. Ultimately, advancing MRI technology through clinical validation studies will help drive the adoption of these cutting-edge capabilities to improve cancer diagnosis and treatment.
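The "high-throughput feature extraction" step in radiomics starts from a segmented region of interest. The sketch below computes a handful of first-order (intensity-histogram) features inside a binary mask on a synthetic image. The simplified definitions, the 32-bin discretization, and the synthetic data are assumptions for illustration; standardized toolkits and the Image Biomarker Standardisation Initiative define these features with more care (for example, fixed bin widths and bias corrections).

```python
import numpy as np

def first_order_features(image, mask, n_bins=32):
    """A few first-order (intensity-histogram) radiomic features inside a binary ROI.

    Simplified definitions for illustration only.
    """
    roi = image[np.asarray(mask, dtype=bool)].astype(float)
    mean = roi.mean()
    std = roi.std()
    skewness = float(((roi - mean) ** 3).mean() / std**3) if std > 0 else 0.0
    counts, _ = np.histogram(roi, bins=n_bins)
    p = counts[counts > 0] / counts.sum()     # probabilities of the occupied bins
    entropy = float(-(p * np.log2(p)).sum())  # Shannon entropy in bits
    return {"voxels": int(roi.size), "mean": float(mean), "std": float(std),
            "skewness": skewness, "entropy": entropy}

# Synthetic 2D "scan" with a circular ROI standing in for a segmented tumor.
rng = np.random.default_rng(42)
image = rng.normal(loc=100.0, scale=10.0, size=(64, 64))
yy, xx = np.mgrid[:64, :64]
mask = (yy - 32) ** 2 + (xx - 32) ** 2 <= 10 ** 2
roi_features = first_order_features(image, mask)
print(roi_features)
```

Because every downstream radiomic model depends on these numbers, small choices such as the number of bins can change feature values, which is part of why multi-site validation is emphasized.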

2.2. CT in Cancer Diagnosis

Computed tomography (CT) remains a widely used and indispensable tool for cancer imaging due to its availability, fast scan times, and reasonable cost profile [15]. Conventional CT provides high-resolution anatomical images to delineate tumors and involved anatomy, guide biopsies, plan radiation therapy, and monitor treatment response [16]. However, studies show substantial variability in CT utilization and radiation exposure across different healthcare systems [17].

Computed tomography (CT) is a medical imaging technique that uses X-rays and computer processing to create cross-sectional images of the body. The key components of a CT scanner include an X-ray tube that produces a narrow beam of X-rays, a detector array opposite the tube to measure attenuation, and a motorized gantry to rotate the X-ray source and detector around the patient. As the beam passes through the body, it is attenuated depending on the density of structures. The detector data is processed by a computer to reconstruct axial slice images. CT provides excellent visualization of bones, blood vessels, and organs. Contrast agents are often used to enhance the visualization of soft tissues. The CT images allow the evaluation of anatomy in detail for diagnostic purposes. Recent advances like multislice spiral CT have enabled faster scanning times and improved spatial resolution. Overall, CT is a vital radiologic technique that produces high resolution 3D views of internal structures.
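The attenuation-to-image step can be illustrated with two standard relations: the Beer-Lambert law for a monoenergetic beam, and the Hounsfield scale that expresses each voxel's attenuation relative to water and air. The sketch below also applies a display window. The attenuation coefficient of water (0.19 per cm, roughly appropriate near 70 keV), the voxel value, and the window settings are assumptions; clinical CT beams are polyenergetic, and scanners calibrate HU directly.

```python
import math

# Assumed linear attenuation coefficients (1/cm), roughly representative near 70 keV.
MU_WATER = 0.19
MU_AIR = 0.0  # air attenuation is negligible at this scale

def transmitted_intensity(i0, mu_per_cm, thickness_cm):
    """Beer-Lambert law for a monoenergetic beam: I = I0 * exp(-mu * x)."""
    return i0 * math.exp(-mu_per_cm * thickness_cm)

def hounsfield_units(mu_per_cm, mu_water=MU_WATER, mu_air=MU_AIR):
    """CT number: HU = 1000 * (mu - mu_water) / (mu_water - mu_air)."""
    return 1000.0 * (mu_per_cm - mu_water) / (mu_water - mu_air)

def apply_window(hu, level, width):
    """Map HU to a display gray level in [0, 1] for a given window level and width."""
    lower = level - width / 2.0
    return min(max((hu - lower) / width, 0.0), 1.0)

# A hypothetical soft-tissue voxel attenuating 5% more than water.
mu_voxel = MU_WATER * 1.05
print(hounsfield_units(mu_voxel))                   # about +50 HU
print(transmitted_intensity(1.0, MU_WATER, 20.0))   # fraction passing 20 cm of water
print(apply_window(hounsfield_units(mu_voxel), level=40.0, width=400.0))
```

By construction, water maps to 0 HU and air to -1000 HU, and windowing determines which part of that range is spread across the displayed gray levels.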

2.2.1. Recent Advances in Computed Tomography for Oncologic Applications

Contrast-enhanced CT improves cancer detection by using intravenous iodinated agents that highlight vascular differences between malignant and normal tissues [18]. A meta-analysis of 36 studies found that contrast-enhanced CT had a pooled sensitivity of 83% and specificity of 84% for detecting liver metastases [19]. For pancreatic cancer staging, one multicenter trial reported 98% accuracy for T stage and 92% accuracy for N stage with contrast-enhanced CT compared with histopathology [20]. Dual-energy CT allows material decomposition analysis by acquiring two image sets at different kV levels, enhancing tissue characterization [21]. A 2022 study reported 97% accuracy in detecting lymph node metastases with dual-energy CT in head and neck cancers [22]. Dual-energy CT also improved the detection of bone marrow metastases from prostate cancer [23]. CT perfusion enables quantitative, functional assessment of tumor angiogenesis and hemodynamics by dynamically tracking a contrast bolus [24]. A meta-analysis reported a pooled sensitivity of 94% and specificity of 95% for differentiating lung cancer from benign nodules using CT perfusion [25]. Studies also suggest that CT perfusion helps predict treatment response in settings such as liver metastases.
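One classic way CT perfusion turns dynamic contrast tracking into a number is the maximum-slope method, in which tissue blood flow is estimated as the steepest rise of the tissue enhancement curve divided by the peak arterial enhancement. The sketch below assumes baseline-subtracted curves, a single arterial input, and no venous outflow before the tissue curve reaches its maximum slope; the curve values are hypothetical, and commercial software may use deconvolution-based models instead.

```python
import numpy as np

def blood_flow_max_slope(tissue_enh, arterial_enh, dt_s):
    """Maximum-slope blood-flow estimate from CT perfusion time-attenuation curves.

    Inputs are baseline-subtracted enhancement curves (HU above pre-contrast)
    sampled every dt_s seconds. BF = max tissue slope / peak arterial enhancement
    (1/s), scaled by 60 s/min and 100 mL to give mL/min/100 mL of tissue.
    """
    tissue = np.asarray(tissue_enh, dtype=float)
    artery = np.asarray(arterial_enh, dtype=float)
    max_slope = np.max(np.diff(tissue)) / dt_s  # HU per second
    return max_slope / np.max(artery) * 60.0 * 100.0

# Hypothetical curves sampled once per second.
artery = [0, 50, 200, 150, 80, 40]
tissue = [0, 2, 6, 10, 12, 13]
print(blood_flow_max_slope(tissue, artery, dt_s=1.0))  # roughly 120 mL/min/100 mL
```

The method is simple and fast, but it is sensitive to the temporal sampling interval and to noise in the tissue curve, which is one source of the variability between perfusion studies.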

Radiomics research has defined quantitative imaging signatures from routine CTs associated with lung cancer survival and genotype [26]. Ongoing work applies deep learning to extract further value from CTs through techniques like image reconstruction to reduce noise and artifacts while lowering radiation dose. Overall, CT remains indispensable for cancer diagnosis while rapidly evolving through new functional imaging approaches, quantitative analytics, and computational methods that promise to further expand CT’s capabilities. Continued research and technical advances will strengthen CT's role in precision oncology.
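Many CT radiomic signatures include texture features derived from the gray-level co-occurrence matrix (GLCM), which counts how often pairs of quantized intensities occur at a fixed spatial offset. The sketch below builds a symmetric, normalized GLCM for a single offset and derives two classic features, contrast and homogeneity. It assumes the image is already quantized to integer gray levels; real pipelines typically average over several offsets and follow a standardized definition.

```python
import numpy as np

def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized gray-level co-occurrence matrix for one (dx, dy) offset.

    `image` must already be quantized to integers in [0, levels).
    """
    img = np.asarray(image, dtype=int)
    h, w = img.shape
    m = np.zeros((levels, levels), dtype=float)
    for r in range(max(0, -dy), min(h, h - dy)):
        for c in range(max(0, -dx), min(w, w - dx)):
            i, j = img[r, c], img[r + dy, c + dx]
            m[i, j] += 1
            m[j, i] += 1  # count both directions so the matrix is symmetric
    return m / m.sum()

def glcm_contrast_homogeneity(p):
    """Contrast weights large gray-level differences; homogeneity rewards small ones."""
    i, j = np.indices(p.shape)
    contrast = float((p * (i - j) ** 2).sum())
    homogeneity = float((p / (1.0 + np.abs(i - j))).sum())
    return contrast, homogeneity

checker = np.indices((4, 4)).sum(axis=0) % 2   # maximally heterogeneous pattern
uniform = np.zeros((4, 4), dtype=int)          # perfectly homogeneous pattern
print(glcm_contrast_homogeneity(glcm(checker, levels=2)))  # (1.0, 0.5)
print(glcm_contrast_homogeneity(glcm(uniform, levels=2)))  # (0.0, 1.0)
```

The two toy patterns sit at opposite ends of the texture spectrum; tumors with heterogeneous internal structure tend to produce higher contrast and lower homogeneity values.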

2.3. Mammography in Cancer Diagnosis

Mammography using low-dose X-rays is the primary screening tool for early breast cancer detection, with numerous randomized trials validating its mortality benefit [27, 28]. However, mammography sensitivity is lower in dense breasts, estimated at just 47-64% [29]. Supplemental ultrasound in dense breasts improves cancer detection but increases false positives [30].
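The trade-off described above, where supplemental screening finds more cancers while adding false positives, follows directly from the definitions of sensitivity, specificity, and positive predictive value (PPV). The sketch below computes them from confusion-matrix counts. The cohort of 10,000 women with 50 cancers and all counts are hypothetical numbers chosen for illustration, not results from the cited studies.

```python
def screening_metrics(tp, fp, fn, tn):
    """Return sensitivity, specificity, and positive predictive value (PPV)."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    ppv = tp / (tp + fp)
    return sensitivity, specificity, ppv

# Hypothetical cohort: 10,000 women with dense breasts, 50 of whom have cancer.
mammo_only = screening_metrics(tp=30, fp=900, fn=20, tn=9050)
mammo_plus_us = screening_metrics(tp=40, fp=1500, fn=10, tn=8450)

for label, (sens, spec, ppv) in [("mammography", mammo_only),
                                 ("+ ultrasound", mammo_plus_us)]:
    print(f"{label}: sensitivity={sens:.2f}, specificity={spec:.3f}, PPV={ppv:.3f}")
```

In this made-up example, sensitivity rises while specificity and PPV fall, because at low disease prevalence even a modest increase in false positives outweighs the extra true positives.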

Mammography is an X-ray imaging technique specialized for breast cancer screening and diagnosis. The key components of a mammography system include an X-ray tube with a molybdenum or tungsten target to generate X-rays, compression paddles to immobilize and flatten the breast, an anti-scatter grid to reduce scattered radiation, and a digital detector to capture the image. During a mammogram, the breast is compressed while X-rays pass from the tube through the breast tissue onto the detector. The X-ray attenuation pattern produces a 2D projection image of the internal breast structure. Cancerous tissue absorbs more X-rays than surrounding fat, enabling tumor visualization; because its attenuation is close to that of normal fibroglandular tissue, tumors are harder to see in dense breasts. Digital detectors have replaced film, offering better contrast resolution for identifying microcalcifications. Dedicated mammographic X-ray spectra, compression, and a small focal spot optimize image quality while minimizing dose. Bilateral craniocaudal and mediolateral oblique views are obtained to maximize coverage of breast tissue. Mammography produces high-resolution breast images that can detect clinically occult tumors at early, curable stages, making it the foundation of breast cancer screening.

2.3.1. Recent Advances in Mammography for Oncologic Applications

Digital mammography now predominates over film-screen mammography, offering better contrast resolution to identify microcalcifications and tissue density changes [31]. Digital breast tomosynthesis reduces tissue overlap by reconstructing cross-sectional 3D images from projections acquired at multiple angles [32]. A 2021 study of over 1 million women found that artificial intelligence (AI) assistance with digital mammography improved breast cancer detection across all breast densities [33]. Another study showed that AI could identify cancers that were missed on prior mammograms, enabling earlier diagnosis [34]. Tomosynthesis is reshaping breast cancer screening. A 2021 randomized trial of over 500,000 women demonstrated that tomosynthesis reduced false positives and increased cancer detection compared with digital mammography alone, and multiple studies report that tomosynthesis boosts sensitivity without increasing recalls [35]. Contrast-enhanced mammography uses intravenous iodinated contrast to detect tumor angiogenesis. A multicenter trial found that it improved sensitivity from 50% to 81% in extremely dense breasts compared with digital mammography and tomosynthesis [36]. Emerging tools such as dedicated breast CT and positron emission mammography may further augment breast cancer staging and diagnosis [37]. Overall, mammography remains the foundation of breast cancer screening and diagnosis, and recent advances in AI, tomosynthesis, targeted ultrasound, contrast imaging, and hybrid modalities aim to address its limitations and continuously enhance breast cancer evaluation.

2.4. Histopathology in Cancer Diagnosis

Histopathology involves microscopic examination of tissue specimens to diagnose and characterize disease. In cancer, histopathology is considered the gold standard for definitive diagnosis, grading, and staging [38]. The main components include tissue processing equipment, microtomes, glass slides, and light microscopes. In the histology lab, excised tissues are fixed, dehydrated, cleared, infiltrated with wax, and embedded into tissue blocks. Thin sections are cut from the blocks using a microtome and mounted onto glass slides. Staining with hematoxylin and eosin (H&E) enables visualization of tissue architecture and morphology, and immunohistochemical (IHC) staining can be used to detect specific proteins. The prepared slides are then examined under a light microscope, often connected to a digital camera and monitor, for pathological analysis. Histopathology provides visualization of diseased tissue down to the cellular level. For cancer, key features assessed include tumor type, grade, stage, and margins. Ongoing advances in slide digitization, multiplexed staining, and digital microscopy are enhancing and automating histopathological examination.

Conventional histopathology relies on H&E staining and light microscopy. However, research shows that interobserver variability exists between pathologists who analyze H&E slides [39]. Immunohistochemistry (IHC) uses antibodies to stain specific proteins in tissues, improving sensitivity and objectivity. A 2022 study found that a deep learning model analyzing IHC slides classified lung cancer subtypes with 95% accuracy, compared with 86% for pathologists. Digital pathology enables whole-slide scanning for computer-aided diagnosis, and algorithms can quantify features that are not discernible by eye. A 2022 study reported that an AI model predicted breast cancer recurrence from digitized H&E slides with greater accuracy than pathologists [40]. Multiplex immunofluorescence preserves tissue architecture while staining multiple targets, and quantitative imaging of these stains better delineates the tumor microenvironment [41]. However, few multiplex panels are clinically validated. Genomic tests such as Oncotype DX, which analyze gene expression, add prognostic information beyond histopathology alone for breast and other cancers. Integrating histopathology with genomics and radiomics shows promise for precision oncology [42]. In summary, while histopathology remains the gold standard for cancer diagnosis, technical advances and computational pathology are enhancing the objectivity and prognostic ability of tissue analysis. Standardization of emerging tools in larger clinical trials is still needed.
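Interobserver variability of the kind cited above is commonly quantified with Cohen's kappa, which measures agreement between two raters beyond what would be expected by chance. The sketch below is a plain implementation of unweighted kappa; the two pathologists and their grades are hypothetical, and ordinal grading studies often prefer a weighted kappa instead.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa: (observed - expected) / (1 - expected)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of each rater's marginal frequency per category.
    expected = sum(freq_a[c] * freq_b[c] for c in set(freq_a) | set(freq_b)) / n**2
    if expected == 1.0:
        return 1.0  # both raters used one identical category; treated as perfect agreement here
    return (observed - expected) / (1.0 - expected)

# Hypothetical tumor grades (1-3) assigned to the same ten slides by two pathologists.
pathologist_1 = [1, 1, 2, 2, 3, 3, 1, 2, 3, 1]
pathologist_2 = [1, 2, 2, 2, 3, 2, 1, 2, 3, 1]
print(round(cohens_kappa(pathologist_1, pathologist_2), 3))
```

Here the raters agree on 80% of slides, but after discounting chance agreement (33%) the kappa is about 0.70, which illustrates why raw percent agreement can overstate reproducibility.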

2.5. Positron Emission Tomography in Cancer Diagnosis

Positron emission tomography (PET) imaging is a powerful nuclear medicine technique commonly used for cancer diagnosis. PET visualizes the biochemical activity, which often precedes anatomical changes. Additionally, by imaging the metabolic processes, PET can differentiate benign from malignant lesions, detect metastases, and assess the extent of tumor spread beyond what conventional imaging shows.

Briefly, PET is a molecular imaging technique that involves injecting a radioactive tracer such as fluorodeoxyglucose (FDG) and detecting the emitted radiation to map its biodistribution. Because FDG accumulates preferentially in metabolically active cancer cells, PET detects biochemical function that often precedes morphological changes. Dedicated PET systems consist of a ring of detectors encircling the patient to register coincident 511 keV gamma rays originating from positron annihilation. Tomographic reconstruction algorithms generate 3D datasets highlighting areas of increased radiotracer uptake. Hybrid PET/CT scanners combine molecular PET information with anatomical CT detail. PET's high sensitivity and tumor-to-background contrast enable the detection of small, early-stage malignant lesions and metastases not seen on conventional imaging. Whole-body PET/CT is now routine for initial staging, restaging, and recurrence monitoring across multiple cancer indications, though limited specificity (for example, FDG uptake in inflammation) means that suspicious findings often require histopathological confirmation.
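PET uptake is usually reported as a standardized uptake value (SUV), which normalizes the measured tissue activity concentration by the injected activity per unit body weight. The sketch below computes body-weight SUV with the injected dose decay-corrected to scan time using the fluorine-18 half-life. It assumes the tissue concentration is measured at scan time (not already decay-corrected) and a tissue density of 1 g/mL; the patient and lesion values are hypothetical, and scanners differ in how they store decay-correction information.

```python
import math

F18_HALF_LIFE_MIN = 109.77  # physical half-life of fluorine-18, in minutes

def suv_body_weight(tissue_bq_per_ml, injected_bq, weight_kg, minutes_post_injection):
    """Body-weight SUV with the injected activity decay-corrected to scan time.

    Assumes 1 g of tissue ~ 1 mL, so grams of body weight stand in for mL.
    """
    decay = math.exp(-math.log(2.0) * minutes_post_injection / F18_HALF_LIFE_MIN)
    activity_per_gram = injected_bq * decay / (weight_kg * 1000.0)
    return tissue_bq_per_ml / activity_per_gram

# Hypothetical scan: 370 MBq FDG, 70 kg patient, lesion at 20 kBq/mL, imaged at 60 min.
print(round(suv_body_weight(20_000.0, 370e6, 70.0, 60.0), 2))
```

An SUV of 1 corresponds to uptake equal to the average whole-body concentration, so the normalization lets lesions be compared across patients and time points, provided acquisition timing and calibration are consistent.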

2.5.1. Recent Advances in PET for Oncologic Applications

2.5.1.1. Novel PET Radiotracers

A wide array of novel PET tracers is currently under investigation to improve tumor characterization, staging, and treatment response assessment. For example, gallium-68 (Ga-68) prostate-specific membrane antigen (PSMA) ligands such as Ga-68-PSMA-11 have demonstrated excellent sensitivity for prostate cancer lymph node staging versus conventional imaging in early clinical studies. However, as Calais et al. [2021] [43] discuss, larger multi-center trials are still needed to fully validate improved patient management outcomes. Similar cautions surround other emerging PET tracers such as FAPI-04 for imaging fibroblast activation protein expression. Early clinical studies report promising capabilities for therapeutic monitoring in cancers such as sarcoma, but phase 3 trials must still confirm clinical utility. Overall, while novel PET radiotracers are exciting avenues of research, the leap to validated clinical impact remains a work in progress.

2.5.1.2. Hybrid PET/MRI

Hybrid PET/MRI systems enable simultaneous PET and MRI scanning for combined anatomical and functional information. As described by Bailey et al. [2016] [13], integrated PET/MRI has shown initial promise for applications including prostate cancer localization. However, a recent systematic review by Sauter et al. [2022] [44] found limited data from high-quality clinical trials supporting definitive advantages of PET/MRI over standard sequential imaging with PET/CT. Key questions remain around validating improved patient outcomes, cost-effectiveness, and optimal clinical protocols. Given the substantial infrastructure costs involved, larger clinical trials are critical for defining the appropriate evidence-based role of PET/MRI. Overall, advancing PET technologies offer intriguing potential for augmenting cancer assessment but require extensive validation [45]. Early clinical findings show promise, but experience with past novel imaging modalities advises caution until large-scale trials in diverse settings confirm clinical benefit. As research continues, maintaining rigorous assessment of patient impact rather than technical potential alone will be key to ensuring these innovations positively transform oncologic care.

3. EVOLUTION OF CONVOLUTIONAL NEURAL NETWORKS (CNNS) IN MEDICAL IMAGE ANALYSIS

Convolutional neural networks (CNNs) have become a dominant machine learning approach for medical image analysis in cancer applications. CNNs are a specialized type of artificial neural network well suited to processing pixel data such as images; they use convolutional filters to automatically extract spatial features through hierarchical layers of representation learning [2]. The key characteristic of CNNs is the convolution operation in their layers. A convolutional layer consists of filters that slide over the input image to extract spatial features, and each filter acts as a feature detector that responds to specific patterns in the underlying pixels. By stacking multiple convolutional layers, CNNs build a hierarchical representation of the image data: lower layers detect simple edges and shapes, while higher layers assemble these into complex motifs. CNNs also use pooling layers to downsample feature maps and reduce computational requirements. Unlike conventional image analysis techniques that rely on hand-crafted features, CNNs learn relevant features directly from raw pixel data using backpropagation and gradient descent during training, with large labeled image datasets teaching them to recognize predictive imaging signatures. This self-learned feature extraction, which eliminates the need for manual feature engineering, is the main reason CNNs have become the dominant approach in medical image analysis and computer vision.
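The convolution, activation, and pooling steps described above can be sketched in a few lines of NumPy. The example below runs one hand-set vertical-edge filter over a synthetic image, applies a ReLU, and downsamples with 2x2 max pooling. In a trained CNN the filter weights are learned by backpropagation rather than set by hand, and frameworks implement these operations far more efficiently; this is only a minimal illustration of the forward computation.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation, the operation CNN convolution layers compute."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for r in range(oh):
        for c in range(ow):
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

def relu(x):
    return np.maximum(x, 0.0)

def max_pool2x2(x):
    """Non-overlapping 2x2 max pooling (an odd trailing row/column is dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# A hand-set vertical-edge detector; a trained CNN would learn such weights.
kernel = np.array([[1.0, 0.0, -1.0]] * 3)
image = np.zeros((8, 8))
image[:, :4] = 1.0  # bright left half, dark right half

edge_features = max_pool2x2(relu(conv2d_valid(image, kernel)))
print(edge_features)  # each row is [0, 3, 0]: the edge response survives pooling
```

The strong response appears only in the column where the bright-to-dark edge sits, and pooling halves the spatial size while keeping that response, which is the same pattern a CNN layer stack repeats with many learned filters.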

3.1. Recent Advances in CNNs Applications for Oncologic Studies

Studies have applied CNNs to a diverse range of cancer imaging tasks, including detection, segmentation, classification, and prognosis prediction across modalities such as mammography, histopathology, CT, and MRI [3]. For example, a 2017 study developed a deep CNN for multi-class classification of lung cancer subtypes using histopathology slides, achieving an accuracy of 96.4% vs. 61.4% for a baseline model. Another study applied an ensemble of CNNs to predict EGFR mutation status from lung CT images with 92.4% accuracy [46]. Recent advances have adapted CNNs for volumetric medical data, using 3D convolutional filters to incorporate spatial context from MRI and CT volumes [47]. Studies have shown that 3D CNNs improve various performance metrics over 2D counterparts for brain, lung, and liver tumor segmentation [48]. Attention mechanisms are being integrated into CNN architectures to learn to focus on salient regions and critical features in medical images [49]. Graph neural networks are also emerging to effectively model nonlocal relationships in cancer imaging data [50]. Overall, CNNs underpin many state-of-the-art approaches for cancer detection, diagnosis, prognosis, and outcomes prediction across medical imaging modalities. Ongoing innovation in CNN variants, hybrid architectures, and self-supervision aims to enhance their representation learning capabilities and clinical impact further.

3.2. U-Net for Cancer Medical Image Analysis

U-Net is a deep learning architecture, first introduced in 2015, that has been widely adopted for medical image segmentation in cancer applications. Its defining feature is an encoder-decoder structure with skip connections between corresponding layers. The encoder progressively downsamples feature maps to capture context, while the decoder upsamples them to recover the spatial resolution lost during encoding. Skip connections concatenate encoder activations with the corresponding decoder activations, enabling precise localization [51].
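The encoder, decoder, and skip-connection data flow can be sketched at the level of tensor shapes. This is a simplified illustration under stated assumptions: convolutions are omitted, nearest-neighbor repetition stands in for the learned up-convolution, tensors use a (channels, height, width) layout, and spatial sizes are even (the original U-Net also crops encoder features before concatenation because it uses unpadded convolutions).

```python
import numpy as np

def down(x):
    """2x2 max pooling on a (channels, H, W) tensor: halves spatial size (encoder step)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def up(x):
    """Nearest-neighbor 2x upsampling (stand-in for a learned up-convolution)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def skip_concat(decoder_feat, encoder_feat):
    """Skip connection: stack encoder and decoder activations along the channel axis."""
    return np.concatenate([encoder_feat, decoder_feat], axis=0)

rng = np.random.default_rng(0)
enc1 = rng.random((16, 64, 64))   # high-resolution encoder features
bottleneck = down(enc1)           # (16, 32, 32): coarser but more contextual
dec1 = up(bottleneck)             # (16, 64, 64): size restored, fine detail lost
merged = skip_concat(dec1, enc1)  # (32, 64, 64): fine detail re-injected
```

Upsampling alone restores the grid size but not the detail discarded by pooling; concatenating the untouched encoder features is what gives the following decoder layers access to precise boundary information.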

A key advantage of U-Nets is that they can be trained end to end from relatively few annotated samples, particularly when combined with data augmentation and transfer learning. Studies have applied U-Nets to segment tumors, organs, and tissues across modalities, including histology, mammography, ultrasound, CT, and MRI [52]. For example, a 2020 study used a U-Net to segment invasive ductal carcinoma in whole slide images, achieving a Dice coefficient of 0.87 vs. 0.83 for pathologists [53]. Another study employed a U-Net for liver tumor segmentation in CT scans, obtaining 95% Dice similarity with expert radiologist annotations [54]. 3D U-Nets extend the architecture to volumetric data, using 3D operations and filters to incorporate spatial context from CT and MRI volumes. Research has reported that 3D U-Nets improve segmentation and classification performance over 2D U-Nets across a range of applications [55].
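The Dice coefficient quoted in these studies measures the overlap between a predicted mask A and a reference mask B as 2|A ∩ B| / (|A| + |B|), ranging from 0 (no overlap) to 1 (perfect agreement). A minimal sketch for binary masks follows; returning 1.0 when both masks are empty is an assumed convention, and published tools differ on this edge case.

```python
import numpy as np

def dice_coefficient(pred, truth):
    """Dice = 2 * |pred AND truth| / (|pred| + |truth|) for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    intersection = np.logical_and(pred, truth).sum()
    total = pred.sum() + truth.sum()
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (assumed convention)
    return 2.0 * intersection / total

# Reference 4x4 tumor mask and a prediction shifted right by one pixel.
truth = np.zeros((8, 8), dtype=bool)
truth[2:6, 2:6] = True
pred = np.zeros((8, 8), dtype=bool)
pred[2:6, 3:7] = True
score = dice_coefficient(pred, truth)  # 2 * 12 / (16 + 16) = 0.75
```

A one-pixel shift of a small lesion already costs a quarter of the Dice score, which is why Dice values are most informative when reported alongside lesion size.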

3.2.1. Recent Cancer Imaging Applications of U-Nets

U-Nets have been extensively used for semantic segmentation in cancer imaging. Tasks include delineating tumor boundaries, detecting metastases, and segmenting organs at risk across modalities. In digital pathology, U-Nets segment nuclei, glands, and other cellular structures in histology slides [53]. In mammography, U-Nets identify suspicious lesions and calcifications. With CT/MRI data, U-Nets can delineate tumor volumes and nearby organs for radiotherapy planning [55]. In ultrasound, U-Nets have been used to classify and localize ovarian cancer metastases [56].

Ongoing innovation in U-Net methodology aims to further improve cancer imaging performance. Attention U-Nets use attention gates to focus on salient structures [57]. Residual U-Nets add identity mappings between layers to improve gradient flow [58]. 3D U-Nets incorporate volumetric context from CT/MRI for improved segmentation [59]. Cascade and nested U-Nets enable stage-wise refinement of segmentation. Overall, U-Nets have proven highly effective for precise anatomical delineation in cancer imaging, and ongoing innovation in architectures, loss functions, and the incorporation of domain knowledge continues to extend their capabilities. U-Nets are likely to remain a key enabler for integrating deep learning into cancer diagnostic workflows.
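An attention gate can be sketched as an additive attention step that produces a per-pixel coefficient in (0, 1) and multiplies it into the skip-connection features. The sketch below follows the general additive form used in Attention U-Net, but it is a simplified assumption: 1x1 convolutions are written as channel-wise matrix products, the gating signal is assumed to be already resampled to the skip features' resolution, and all weights are random placeholders rather than trained parameters.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def attention_gate(x, g, w_x, w_g, psi, b=0.0, b_psi=0.0):
    """Additive attention gate.

    x   : skip-connection features, shape (C_x, H, W)
    g   : gating signal from the coarser decoder level, shape (C_g, H, W)
    w_x : (F, C_x) and w_g : (F, C_g), acting as 1x1 convolutions
    psi : (F,), projects intermediate features to one attention logit per pixel
    Returns the gated features (same shape as x) and the attention map (H, W).
    """
    # 1x1 convolutions are matrix products over the channel axis.
    q = np.einsum("fc,chw->fhw", w_x, x) + np.einsum("fc,chw->fhw", w_g, g) + b
    q = np.maximum(q, 0.0)                                   # ReLU
    alpha = sigmoid(np.einsum("f,fhw->hw", psi, q) + b_psi)  # per-pixel coefficients
    return x * alpha[None, :, :], alpha

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 16))
g = rng.standard_normal((4, 16, 16))
w_x = rng.standard_normal((6, 8))
w_g = rng.standard_normal((6, 4))
psi = rng.standard_normal(6)
gated, alpha = attention_gate(x, g, w_x, w_g, psi)
```

Because the coefficients scale the skip features rather than replacing them, pixels the gate deems irrelevant are suppressed before they reach the decoder, while the rest of the U-Net is unchanged.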

3.3. Generative Adversarial Networks (GANs) for Cancer Medical Image Analysis

Generative adversarial networks (GANs) have emerged as a powerful generative modeling approach for unsupervised learning. First introduced in 2014 by Goodfellow et al. [60], GANs use an adversarial training framework between two neural networks: a generator and a discriminator. The generator attempts to synthesize artificial data that resemble the true data distribution, while the discriminator aims to distinguish real samples from the synthesized ones. Through this adversarial competition, the two networks drive each other to improve: the generator produces increasingly realistic data to fool the discriminator, while the discriminator becomes an ever more discerning judge of real versus generated data. At equilibrium, the generator ideally learns the true data distribution well enough to create novel samples indistinguishable from real data. This minimax game provides an elegant way to approximate target distributions without explicitly specifying a likelihood or a hand-crafted similarity measure, which makes GANs effective for data that lack simple parametric descriptions, such as natural images. After training on finite real datasets, GANs can produce sharp, realistic samples.
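The minimax game can be written as the value function V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))], which the discriminator D maximizes and the generator G minimizes. The sketch below estimates V from discriminator outputs on a batch and includes the non-saturating generator loss suggested by Goodfellow et al.; it shows only the objectives, not the networks or the training loop, and the function names are illustrative.

```python
import numpy as np

def gan_value(d_real, d_fake, eps=1e-12):
    """Monte Carlo estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))],
    given discriminator probabilities on real and generated samples."""
    d_real = np.clip(d_real, eps, 1 - eps)
    d_fake = np.clip(d_fake, eps, 1 - eps)
    return np.mean(np.log(d_real)) + np.mean(np.log(1 - d_fake))

def discriminator_loss(d_real, d_fake):
    # The discriminator ascends V, i.e. minimizes -V.
    return -gan_value(d_real, d_fake)

def generator_loss_nonsaturating(d_fake, eps=1e-12):
    # Instead of minimizing log(1 - D(G(z))), maximize log D(G(z)),
    # which gives stronger gradients early in training when D rejects fakes easily.
    return -np.mean(np.log(np.clip(d_fake, eps, 1 - eps)))

# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
balanced = gan_value(np.full(8, 0.5), np.full(8, 0.5))  # equals -log(4), about -1.386
```

When the discriminator outputs 0.5 for every sample, as it would at the theoretical equilibrium where the generated and real distributions match, V equals -log 4; any discriminator that separates the two sets scores higher.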

A key advantage of GANs is their applicability where large training datasets are difficult to obtain, as in medical imaging. GANs can generate synthetic data for augmentation, improving models trained on limited data, and can synthesize diverse pathological cases to aid diagnosis. Ongoing GAN research aims to enhance the control and quality of generated medical data [60,61].

3.3.1. Recent Cancer Imaging Applications of GANs

GANs are well suited to tasks where large labeled medical datasets are difficult to obtain [61]. They can synthesize realistic malignant and benign lesions with annotated labels to expand limited clinical data, and studies have generated synthetic brain, lung, and breast tumors using GANs [62]. GANs can also reconstruct high-quality CT and MRI images from incomplete or sparse data, improving workflow efficiency; research has reported that GAN-reconstructed brain MRI and cardiac CT images are radiologically valid [63]. For image enhancement, GANs perform super-resolution on ultrasound, MRI, and CT images, and they enable noise reduction in low-dose CT and MRI. GANs trained on paired data can convert MRI into CT-like images, providing synthetic CT surrogates where real CT is unavailable [64]. For data augmentation, GANs expand limited medical image datasets by generating additional, diverse, plausible samples for improved modeling [65].
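GAN-based augmentation ultimately amounts to mixing generated samples into the training set. The sketch below assumes a hypothetical `generator` callable (standing in for a trained, possibly conditional, GAN generator) and an arbitrary latent size of 64; it shows only the data handling. Synthetic images should be kept out of validation and test sets so that reported performance reflects real data.

```python
import numpy as np

def augment_with_synthetic(real_images, real_labels, generator, n_synthetic,
                           label, rng, latent_dim=64):
    """Append `n_synthetic` generated images, all assigned `label`, to a real dataset.

    `generator` maps a batch of latent vectors (n, latent_dim) to images."""
    z = rng.standard_normal((n_synthetic, latent_dim))
    fake_images = generator(z)
    images = np.concatenate([real_images, fake_images], axis=0)
    labels = np.concatenate([real_labels, np.full(n_synthetic, label)], axis=0)
    # Shuffle so synthetic samples are interleaved with real ones.
    order = rng.permutation(len(images))
    return images[order], labels[order]

rng = np.random.default_rng(0)
real_x = rng.random((20, 32, 32))
real_y = np.array([0] * 15 + [1] * 5)  # e.g. benign majority, malignant minority

def toy_generator(z):
    # Hypothetical stand-in for a trained generator: returns random 32x32 images.
    return rng.random((len(z), 32, 32))

x_aug, y_aug = augment_with_synthetic(real_x, real_y, toy_generator,
                                      n_synthetic=10, label=1, rng=rng)
```

Here ten synthetic minority-class images rebalance a 15:5 split to 15:15, one common motivation for GAN augmentation when malignant cases are scarce.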

Recent methodological advances provide greater control over GAN synthesis. Conditional GANs let users specify desired output properties such as tumor size and location [66]. Contextual GANs use semantic text or labels to generate consistent, realistic data [67]. CycleGANs enable unpaired cross-domain image translation, for example converting MRI to CT without matched image pairs [68]. Self-supervised GANs derive artificial labels from unlabeled data to support semi-supervised learning [69]. In summary, GANs are driving rapid progress in cancer imaging by synthesizing realistic data that address limitations such as scarce labeled datasets, and ongoing innovation is expected to further enhance their capabilities and facilitate their integration into clinical practice.
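CycleGANs can learn MRI-to-CT translation without matched pairs because, in addition to their adversarial losses, they penalize any round trip that fails to return to the starting image. A minimal sketch of that L1 cycle-consistency term follows, with hypothetical intensity mappings standing in for the generator networks G (MRI to CT) and F (CT to MRI).

```python
import numpy as np

def cycle_consistency_loss(x_mri, y_ct, G, F):
    """L1 cycle-consistency: mean|F(G(x)) - x| + mean|G(F(y)) - y|.

    x_mri and y_ct are unpaired batches: no x needs a matching y."""
    forward = np.mean(np.abs(F(G(x_mri)) - x_mri))
    backward = np.mean(np.abs(G(F(y_ct)) - y_ct))
    return forward + backward

rng = np.random.default_rng(0)
x = rng.random((4, 8, 8))  # toy "MRI" batch
y = rng.random((3, 8, 8))  # toy "CT" batch, deliberately a different size

def G(a):
    # Hypothetical MRI -> CT mapping.
    return 2.0 * a + 1.0

def F(b):
    # Hypothetical CT -> MRI mapping: the exact inverse of G.
    return (b - 1.0) / 2.0

def F_bad(b):
    # Hypothetical mapping that is NOT the inverse of G.
    return b / 2.0

loss_ok = cycle_consistency_loss(x, y, G, F)       # approximately 0
loss_bad = cycle_consistency_loss(x, y, G, F_bad)  # approximately 1.5
```

In full CycleGAN training this term is weighted and added to the two adversarial losses; the toy mappings only demonstrate that mutually inverse translators incur no cycle penalty while mismatched ones do.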

4. EMERGENCE OF ARTIFICIAL INTELLIGENCE IN CANCER MEDICAL IMAGING

Rapid advances in artificial intelligence (AI) have ushered in a transformative era in cancer imaging and diagnosis. Innovative AI algorithms have demonstrated remarkable capabilities in analyzing complex medical images, uncovering insights that can potentially surpass human interpretation [70, 71]. Early detection of cancer and accurate characterization of prognostic factors remain significant challenges in clinical practice, and AI-powered systems offer the promise of automating and enhancing cancer screening, diagnosis, and treatment planning, with the potential to transform medical imaging.

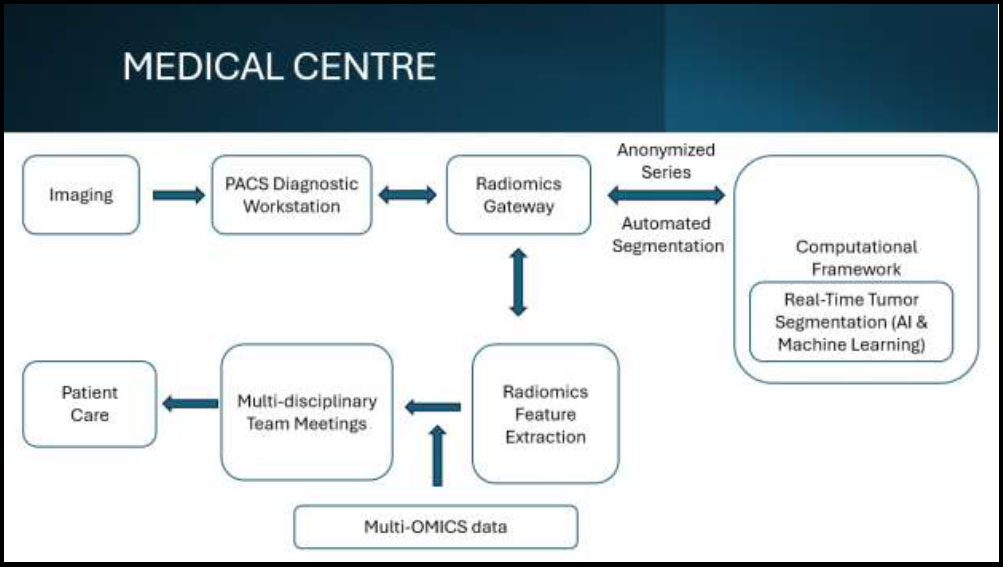

Despite these promising developments, it is crucial to maintain realistic expectations about the current evidence supporting these technologies and to avoid premature adoption without rigorous validation. Carefully navigating the technical, ethical, and practical challenges will be essential for the successful integration of AI into cancer care. This section examines the key applications of AI in cancer imaging, the ongoing challenges, and emerging perspectives on the future direction of this rapidly evolving field (Fig. 2).

4.1. Key Applications of AI in Cancer Imaging

4.1.1. Computer-aided Detection (CADe)

One of the most prominent applications of AI in cancer imaging is computer-aided detection (CADe). AI algorithms are increasingly used for CADe, automatically flagging suspicious lesions on radiological scans that clinicians might otherwise overlook. Numerous studies have reported that AI can match or exceed expert accuracy in tasks such as lung nodule detection on CT and breast lesion identification on mammography [72, 73]. These deep learning methods learn, from large annotated datasets, subtle imaging features that are predictive of cancer, thereby supporting the early detection of malignancies.
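A CADe system typically turns a model's per-pixel suspicion scores into a short list of findings for the reader. The sketch below assumes such a probability map already exists and groups above-threshold pixels into connected regions with a breadth-first flood fill; the threshold, minimum size, and 4-connectivity are illustrative choices, not validated operating points.

```python
from collections import deque

import numpy as np

def flag_candidates(prob_map, threshold=0.5, min_size=1):
    """Group above-threshold pixels into 4-connected regions.

    Returns (centroid_row, centroid_col, peak_probability, size) per region."""
    mask = prob_map >= threshold
    visited = np.zeros_like(mask, dtype=bool)
    rows, cols = mask.shape
    findings = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r, c] or visited[r, c]:
                continue
            # Breadth-first flood fill collects one connected suspicious region.
            queue, pixels = deque([(r, c)]), []
            visited[r, c] = True
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny, nx] and not visited[ny, nx]):
                        visited[ny, nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_size:
                ys, xs = zip(*pixels)
                peak = max(prob_map[p] for p in pixels)
                findings.append((float(np.mean(ys)), float(np.mean(xs)),
                                 float(peak), len(pixels)))
    return findings

# Toy probability map with two suspicious blobs and one sub-threshold pixel.
prob = np.zeros((10, 10))
prob[1:3, 1:3] = 0.9
prob[6:9, 6:9] = 0.7
prob[5, 0] = 0.3
findings = flag_candidates(prob, threshold=0.5)
```

Raising the threshold trades sensitivity for fewer false-positive marks per scan, which is the central tuning decision when deploying a CADe tool.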

4.1.2. AI-based Quantification of Prognostic Biomarkers

Another key application of AI in cancer imaging is the automated quantification of prognostic biomarkers. Radiomic analysis extracts thousands of quantitative descriptors of tumor shape, texture, and kinetics, and can uncover imaging features that are imperceptible to the human eye. When correlated with genomic data, these radiomic signatures have shown promise in predicting therapy response and patient outcomes. Furthermore, AI-driven segmentation of tumor boundaries and organs at risk on radiotherapy planning CT/MRI scans can facilitate more precise radiation delivery, and real-time adaptation of radiation beams based on AI-segmented anatomy may further improve the accuracy and personalization of cancer treatment.
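As a simplified illustration of radiomic feature extraction, the sketch below computes a handful of first-order (intensity histogram) features inside a tumor mask. The feature set, the 16-bin histogram, and the function name are assumptions made for illustration; practical radiomics pipelines compute far more shape, texture, and intensity features with standardized definitions and preprocessing.

```python
import numpy as np

def first_order_features(image, mask, n_bins=16):
    """Compute a few first-order radiomic features of the voxels inside `mask`."""
    roi = image[mask.astype(bool)].astype(float)
    mean = roi.mean()
    std = roi.std()
    # Skewness: asymmetry of the intensity distribution (0 for a symmetric one).
    skewness = np.mean(((roi - mean) / std) ** 3) if std > 0 else 0.0
    # Shannon entropy of the intensity histogram, a simple heterogeneity measure.
    counts, _ = np.histogram(roi, bins=n_bins)
    p = counts[counts > 0] / counts.sum()
    entropy = -np.sum(p * np.log2(p))
    return {
        "size_voxels": int(roi.size),
        "mean": float(mean),
        "std": float(std),
        "skewness": float(skewness),
        "entropy_bits": float(entropy),
    }

# Toy ROI: half the voxels at intensity 0, half at 1.
image = np.zeros((4, 4))
image[:, 2:] = 1.0
mask = np.ones((4, 4), dtype=bool)
feats = first_order_features(image, mask)  # mean 0.5, std 0.5, entropy 1 bit
```

Even these simple descriptors capture intensity heterogeneity within a lesion; texture features extend the idea by also encoding how intensities are arranged spatially.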

Flowchart of application of artificial intelligence & machine learning in cancer.

4.1.3. Digital Pathology

In digital pathology, AI has demonstrated the ability to classify slides accurately, detect cancer metastases, and subtype tumors based on morphological features. Such automated analysis can help pathologists make faster and more accurate diagnoses and can improve the accessibility and personalization of cancer care [74].
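Whole slide images are usually far too large to process in one pass, so slide-level classification is commonly built from patch-level predictions. The sketch below assumes a hypothetical `patch_classifier` callable and uses top-k averaging as one simple aggregation rule among several; it tiles a slide array and pools patch scores into a single slide score.

```python
import numpy as np

def tile_slide(slide, tile=128, stride=None):
    """Yield (row, col, patch) tiles; edge regions smaller than a full tile are skipped."""
    stride = stride or tile
    h, w = slide.shape[:2]
    for r in range(0, h - tile + 1, stride):
        for c in range(0, w - tile + 1, stride):
            yield r, c, slide[r:r + tile, c:c + tile]

def slide_score(slide, patch_classifier, tile=128, top_k=5):
    """Return (slide-level score, patch scores); the slide score is the
    mean of the top_k highest patch probabilities."""
    scores = np.array([patch_classifier(p) for _, _, p in tile_slide(slide, tile)])
    if scores.size == 0:
        raise ValueError("slide is smaller than one tile")
    k = min(top_k, scores.size)
    return float(np.sort(scores)[-k:].mean()), scores

# Toy 512x512 RGB "slide" with one bright 128x128 region standing in for tumor.
slide = np.zeros((512, 512, 3))
slide[0:128, 256:384] = 1.0

def toy_classifier(patch):
    # Hypothetical patch model: mean intensity used as a fake tumor probability.
    return float(patch.mean())

score, scores = slide_score(slide, toy_classifier)  # 16 tiles, score 0.2
```

Top-k pooling keeps a single small metastasis from being averaged away across thousands of benign tiles, while using k greater than 1 makes the slide score less sensitive to one spurious patch.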

While the applications of AI in cancer imaging hold immense promise, there are significant challenges that must be addressed for successful integration into clinical practice. Thoughtful co-design of AI tools with clinicians will be crucial to responsibly translating these innovations and ensuring their seamless integration into existing workflows, ultimately improving patient outcomes.

4.2. Challenges and Considerations

4.2.1. Generalization Across Diverse Populations

A major challenge in the deployment of AI algorithms in cancer imaging is their ability to generalize across diverse patient populations and imaging equipment. Most AI models are developed and validated on limited datasets that may not capture the full variability of real-world clinical data. Differences in geography, demographics, and scanner types can significantly impact the performance of these algorithms. Addressing this challenge requires continuous model updating and rigorous robustness testing on broad patient data to ensure reliable and consistent performance across diverse clinical settings [70,75].
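One concrete form of the robustness testing described above is to stratify evaluation by site, scanner, or demographic group instead of reporting a single pooled figure. A minimal sketch, assuming binary labels and predictions and hypothetical group labels:

```python
import numpy as np

def sensitivity_specificity_by_group(y_true, y_pred, groups):
    """Return {group: (sensitivity, specificity)} for binary labels and predictions."""
    y_true, y_pred, groups = (np.asarray(a) for a in (y_true, y_pred, groups))
    report = {}
    for g in np.unique(groups):
        sel = groups == g
        t, p = y_true[sel], y_pred[sel]
        tp = int(np.sum((t == 1) & (p == 1)))
        fn = int(np.sum((t == 1) & (p == 0)))
        tn = int(np.sum((t == 0) & (p == 0)))
        fp = int(np.sum((t == 0) & (p == 1)))
        # NaN when a group has no positives (or no negatives) to evaluate.
        sens = tp / (tp + fn) if tp + fn else float("nan")
        spec = tn / (tn + fp) if tn + fp else float("nan")
        report[str(g)] = (sens, spec)
    return report

# Hypothetical results from two imaging sites.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 1]
sites = ["A"] * 4 + ["B"] * 4
report = sensitivity_specificity_by_group(y_true, y_pred, sites)
```

Pooled over both sites, sensitivity and specificity would each be 0.75, which hides the fact that site A is perfect while site B sits at 0.5 on both.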

4.2.2. Data Annotation and Model Training

The lack of large, curated, and labeled datasets is another significant limitation, particularly for rarer cancer types. Developing high-quality training data requires substantial human expertise and effort to accurately annotate the relevant imaging features. Strategies such as federated learning, synthetic data augmentation, and self-supervised learning can help mitigate the scarcity of annotated data and enhance the generalizability of AI models [71]. Fostering collaborative efforts among multiple institutions to create open benchmark datasets will be crucial in advancing the field.
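Federated learning lets institutions train a shared model without pooling patient images. The sketch below shows only the server-side aggregation step of federated averaging (FedAvg), in which each site's parameters are weighted by its number of training samples; local training, communication, and any additional privacy safeguards are omitted, and the parameter values are hypothetical.

```python
import numpy as np

def federated_average(site_params, site_sizes):
    """Weighted average of per-site parameter lists (one list of arrays per site)."""
    total = float(sum(site_sizes))
    n_arrays = len(site_params[0])
    return [
        # Each parameter array is averaged across sites, weighted by local sample count.
        sum(params[i] * (n / total) for params, n in zip(site_params, site_sizes))
        for i in range(n_arrays)
    ]

# Hypothetical two-site example: each site holds a weight vector and a bias.
site_a = [np.array([1.0, 1.0]), np.array([0.0])]
site_b = [np.array([3.0, 3.0]), np.array([4.0])]
global_params = federated_average([site_a, site_b], site_sizes=[100, 300])
# -> [array([2.5, 2.5]), array([3.])]
```

Only model parameters leave each institution; the larger site pulls the global model further toward its own weights, which is one reason data heterogeneity across sites still matters in federated settings.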

4.2.3. Interpretability and Trust

The “black box” nature of many advanced AI models, such as deep neural networks, presents a challenge in terms of clinical interpretability and trust. Physicians and patients alike seek transparency into the decision-making logic of these AI systems, as well as reliable estimates of uncertainty for their predictions. Explainable AI (XAI) techniques that provide clear explanations for model outputs are essential for responsible deployment in cancer care. Actively involving clinicians in the design and development of AI tools, from the initial stages, can foster trust and facilitate seamless integration into existing workflows.
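Occlusion sensitivity is one simple, model-agnostic explainability technique: mask part of the image and measure how much the prediction drops. The sketch below assumes any `predict` callable that maps a 2D image to a scalar score; the toy model, which looks only at the image center, is hypothetical and serves to show that the resulting heat map highlights the region the model actually relies on.

```python
import numpy as np

def occlusion_map(image, predict, patch=4, fill=0.0):
    """Slide a blank patch over the image and record the drop in the model's score.

    Large drops mark regions the prediction depends on."""
    base = predict(image)
    h, w = image.shape
    heat = np.zeros((h, w))
    for r in range(0, h, patch):
        for c in range(0, w, patch):
            occluded = image.copy()
            occluded[r:r + patch, c:c + patch] = fill
            heat[r:r + patch, c:c + patch] = base - predict(occluded)
    return heat

def toy_predict(img):
    # Hypothetical model whose score depends only on the central 4x4 region.
    return float(img[4:8, 4:8].mean())

image = np.ones((12, 12))
heat = occlusion_map(image, toy_predict)  # 1.0 over the center block, 0 elsewhere
```

Occlusion maps are coarse and cost one forward pass per patch, but because they treat the model as a black box they can be applied to any of the architectures discussed in this review.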

4.3. Evaluation and Regulatory Hurdles

Evaluating the real-world impact of AI in cancer imaging remains a significant challenge. Most studies to date have focused on demonstrating technical performance on controlled experimental datasets rather than measuring the effects on clinical outcomes such as diagnostic accuracy, prognostic predictions, and treatment response. Cohort studies that assess the added value of AI for patients are essential to validate the clinical utility of these technologies.

Furthermore, the regulatory hurdles for approving AI-based medical devices constrain the translation of these innovations into clinical practice. Developing appropriate evaluation frameworks and obtaining regulatory approval will be crucial steps in realizing the full potential of AI in cancer care.

4.4. Ethical and Social Considerations

Alongside the technical challenges, the integration of AI in cancer imaging also raises important ethical and social considerations. Ongoing concerns around data privacy, security, and the ethical use of patient data emphasize the need for AI solutions to be transparent, unbiased, and sensitive to the social contexts of implementation [76]. Ensuring data privacy and addressing potential biases in AI models are crucial for gaining the trust of both clinicians and patients, as well as for ensuring equitable healthcare delivery. Collaborative efforts involving researchers, clinicians, policymakers, and patient advocates will be essential in designing AI systems that uphold the principles of ethical and responsible innovation. By prioritizing these aspects, the healthcare community can harness the transformative potential of AI while mitigating the risks and ensuring that these technologies contribute positively to cancer care.

4.5. Advanced Perspectives and Future Directions

The future of AI in cancer imaging is promising, but realizing its full potential will require addressing the multifaceted challenges related to data, workflow, regulation, and sociotechnical contexts. Advances in AI, such as the development of more sophisticated neural networks and improved computational power, will likely enhance the accuracy and applicability of these technologies. Emerging trends in the field include Explainable AI (XAI) for improved clinical interpretability [76], federated learning to address privacy concerns and enhance model generalization [77], the integration of imaging data with genomic information for more personalized treatment plans, and real-time imaging analysis to facilitate immediate decision-making during clinical procedures [78]. Collaboration among researchers, clinicians, and industry stakeholders will be vital in harnessing the full potential of AI to improve cancer care outcomes. By maintaining a focus on ethical implementation and continuous improvement, the healthcare community can ensure that AI technologies contribute positively to the field of cancer imaging and diagnosis, ultimately transforming the landscape of personalized cancer care.

The integration of AI into cancer imaging holds the promise of revolutionizing diagnosis and treatment, leading to better patient outcomes. However, the successful translation of these innovations into clinical practice requires careful consideration of technical, ethical, and practical challenges. Rigorous validation, responsible development, and seamless integration into existing workflows will be essential for realizing the full potential of AI in cancer care. The future of cancer imaging and diagnosis holds immense promise, and the responsible integration of AI technologies will be a pivotal driving force in this transformative journey.

CONCLUSION

Emerging computational techniques, particularly deep learning-based methods, have demonstrated remarkable potential for enhancing the clinical utility of medical imaging data. Convolutional neural networks and other advanced machine learning algorithms have shown promising results in automating feature extraction and analysis of complex cancer imaging data. However, it is crucial to maintain a rigorous, evidence-based approach to translating these analytical innovations into routine clinical practice. Careful validation through well-designed clinical studies is essential to ensure that these computational techniques reliably improve patient outcomes and earn the trust of clinicians. While the theoretical potential of these imaging analytics is undoubtedly exciting, we must keep a realistic perspective and focus on demonstrating tangible clinical value beyond technical advances alone. Moreover, responsibly integrating these transformative imaging capabilities into clinical practice urgently requires purposeful collaboration among multidisciplinary experts, including clinicians, computer scientists, and regulatory bodies. Only through such a holistic, evidence-based approach can we ensure the responsible and effective translation of these cutting-edge computational methods into mainstream medical practice, ultimately enhancing the quality of cancer care and improving patient outcomes. Maintaining academic integrity through rigorous research and validation remains paramount in this endeavor.

AUTHORS' CONTRIBUTION

P.K.S., S.A.: Study Concept or Design; P.K.G.: Data Collection; V.G.: Data Analysis or Interpretation; S.S.: Writing the Paper.

LIST OF ABBREVIATIONS

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| CNNs | Convolutional Neural Networks |
| GANs | Generative Adversarial Networks |
| CT | Computed Tomography |
| MRI | Magnetic Resonance Imaging |
| HIFU | High-Intensity Focused Ultrasound |