Early Prediction of Covid-19 Samples from Chest X-ray Images using Deep Learning Approach

Abstract

Aims:

In this study, chest X-ray (CXR) and computed tomography (CT) images are used to analyse and detect COVID-19 using an unsupervised deep learning-based feature fusion approach.

Background:

COVID-19, which has sickened millions of people worldwide, is usually detected with the reverse transcription-polymerase chain reaction (RT-PCR) test, which suffers from limitations such as low viral load and sampling error. Because the majority of infected persons develop lung infections, chest X-rays and computed tomography scans can also be examined. Both CT and CXR imaging modalities allow an early COVID-19 diagnosis and offer an alternative to the RT-PCR test.

Objective:

The manual diagnosis of CXR images and CT scans is labor- and time-intensive. Many AI-based solutions are being investigated to tackle this problem, including deep learning-based detection models, which can assist the radiologist in making a more accurate diagnosis. However, because of the demand for specialized knowledge and high annotation costs, the amount of annotated data available for COVID-19 identification is limited. Additionally, the majority of current state-of-the-art deep learning-based detection models use supervised learning techniques. Because unsupervised learning does not require a labeled dataset, we have investigated various unsupervised learning models for COVID-19 identification in this work.

Methods:

In this study, we propose a COVID-19 detection method based on unsupervised deep learning that makes use of the feature fusion technique to improve performance. Based on this, an automated CNN model is built to distinguish COVID-19 samples from healthy and pneumonic cases using chest X-ray images.

Results:

This model achieved an accuracy of about 99% for classification between COVID-positive and COVID-negative cases. Based on this result, a further classification into pneumonic and non-pneumonic cases is performed, which achieved an accuracy of 94%.

Conclusion:

On both datasets, the COVID-19 detection method based on feature fusion and deep unsupervised learning showed promising results. Additionally, it outperforms four well-known unsupervised methods already in use.

1. INTRODUCTION

The world is currently experiencing coronavirus disease 2019 (COVID-19), an even larger pandemic, about 10 years after the H1N1 influenza pandemic. COVID-19 was first identified in Wuhan, China, in December 2019. It is caused by the seventh coronavirus (CoV) to be found in people. Being a contagious disease, COVID-19 spreads more quickly when people are close together. Travel restrictions are therefore put in place to contain the virus, which also serves to lower the number of infections. Some preventative measures include regularly washing hands with sanitizers or soap and water, keeping a distance of at least 3 feet from people, and avoiding crowded settings. Fever, coughing, and headache are the most typical infection symptoms. Sputum production, a sore throat, and discomfort in the chest are further symptoms. COVID-19 can rapidly progress to viral pneumonia, which carries a 5.8% mortality risk. The COVID-19 mortality rate is fairly high, and it changes over time.

When an infected individual speaks, coughs, or sneezes, the virus can spread easily from their mouth or nasal secretions in the form of tiny droplets. Surfaces are contaminated by droplets or respiratory secretions released by diseased people. Rapid antigen tests (RATs) and reverse transcription-polymerase chain reaction (RT-PCR) are two common diagnostic techniques that provide information about an infection that is currently present. We can learn about the prior infection from an antibody test. Most infected individuals show very few symptoms or are typically asymptomatic and recover over time. However, 15 to 20% of patients typically get serious symptoms and may need to visit an ICU or regular clinics.

It is advised to use RT-PCR to more accurately diagnose COVID-19 in its early stages. However, other studies have found that CT scans and X-rays are more efficient because they take less time and aid in the early isolation of sick individuals. Since there is presently no known cure for COVID-19, care and prevention are of the utmost significance. Using image analysis and machine learning techniques, the pandemic's key prevention strategy of “test, track, treat” can be used to identify the infection.

Instead of employing RT-PCR, X-rays can be used to more accurately identify COVID-19 symptoms in the lower region of the lungs. These tests are extremely important in emergency situations where the test report is needed quickly. X-ray-based diagnosis has not, however, yet been demonstrated to be 100 percent reliable at all times, so the method needs to become more efficient and reliable. Applying AI-based technology makes detection simpler, less expensive, and faster. The major objective is to reduce the amount of time needed for detection and the number of kits used, as storing these samples is laborious. CT scans and X-rays make detection and prevention easier.

Machine learning (ML) is now employed in practically every industry, including malware detection, healthcare diagnostics, social networking, and banking. Deep learning is a branch of machine learning that, for image tasks, makes use of the convolutional neural network (CNN). Deep learning has become the key approach in ImageNet classification, one of the world's premier computer-vision competitions. Deep learning algorithms use multiple layers to learn data representations across numerous levels of abstraction. Using a deep learning model, images, sounds, and text can be categorized. Deep learning algorithms can sometimes enhance human output.

COVID-19 is caused by the seventh coronavirus to be discovered in humans. One major downside is that it spreads quickly and can easily develop into novel coronavirus-infected pneumonia (NCIP), which carries a 5.8% chance of death. Therefore, an AI-based model that uses deep learning, particularly a CNN, has been used for disease identification in order to enhance both speed and reliability.

A kind of pulmonary illness known as pneumonia can be brought on by a virus, bacteria, fungi, or any other pathogen. This causes an infection in the lungs that inflames the air sacs, and in many cases, it progresses to a severe condition called pleural effusion because pus-like fluid fills the lungs and makes breathing difficult.

Generally speaking, the fatality risk is around 15% and is frequently observed in unhygienic, densely crowded, and impoverished or developing countries. In this case, early detection is crucial because it can also be lethal without it. Breathlessness, fast breathing, dizziness, and sweating are the main symptoms of this disease.

15% of those infected with COVID-19 have pneumonia, which can quickly progress to the condition known as Acute Respiratory Distress Syndrome (ARDS). As a result, the lungs, which are where oxygen is exchanged with the blood, experience significant inflammation. Lung walls begin to thicken, making it extremely difficult to breathe and exchange oxygen. Affected individuals are most likely to be above 65. As a result, chest X-ray images that show white patches where damage has occurred might be used to make an early diagnosis.

Therefore, the model created can determine whether a patient has COVID or not, as well as whether they have pneumonia or not. The CNN model is utilized because it uses machine learning to identify key properties without human intervention. Additionally, it provides excellent accuracy and understands the relationship between the image's pixels better than any other method.

This model's primary goal is the early diagnosis of the disease and other related lung problems. Some researchers believe that during the early stages of the disease, there will be little to no indications or traces apparent in the X-ray, but the images gathered and categorized contradict this. The primary limiting factor is also the lack of sufficient X-ray pictures to differentiate between viral and bacterial pneumonia. However, this model is essential for identifying lung diseases such as pneumonia and COVID-19.

The remaining sections of this paper are organized as follows: Section 2 contains the literature review. Section 3 provides a description of the materials and methodology. Section 4 presents the results and analysis. Section 5 is the conclusion.

2. LITERATURE REVIEW

According to Berrimi et al. [1], chest imaging is essential for the early diagnosis of COVID-19. Patients with chronic illnesses and elderly people tend to exhibit the worst symptoms. According to their research, chest X-rays and CT scans are more trustworthy and useful diagnostic tools, particularly for patients between the ages of 21 and 50. Convolutional neural networks typically need enormous datasets to train effectively, so transfer learning is used to get around this. The paper uses three main steps: data augmentation, training of a DenseNet convolutional network, and application of a second trained DenseNet convolutional network. The authors apply the DenseNet and InceptionV3 models. The proposed strategy performed better than the competing models, and the paper demonstrates the model's dependability and efficiency.

Saha et al. [2] developed an ensembling approach that employs multiple convolutional neural networks. Feature concatenation and decision fusion are two of the techniques employed. With feature concatenation, features from the base models are combined and sent to the classification head. With decision fusion, a maximum voting method combines the predictions of several models into a single prediction. The study performs three-class and four-class classification. The accuracy of the three-class classification is 96%, while that of the four-class classification is 89.21%. Therefore, using a combination of several efficient models can lead to improved performance. Both methods aid in a more accurate classification of the image.
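The two fusion strategies described above can be illustrated with a minimal sketch. It is not the implementation of Saha et al.; the function names, the tie-breaking rule, and the example labels are assumptions made only for illustration.

```python
from collections import Counter


def majority_vote(predictions):
    """Decision fusion: return the label predicted by the most base models.

    Assumption: ties go to the label that appears first in the input,
    because Counter keeps first-seen order among equal counts.
    """
    if not predictions:
        raise ValueError("need at least one prediction")
    return Counter(predictions).most_common(1)[0][0]


def concatenate_features(feature_vectors):
    """Feature concatenation: join per-model feature vectors into one vector
    that a shared classification head could consume."""
    fused = []
    for vector in feature_vectors:
        fused.extend(vector)
    return fused


# Hypothetical outputs of three base models for one chest X-ray.
votes = ["covid", "pneumonia", "covid"]
fused_label = majority_vote(votes)
fused_vector = concatenate_features([[0.1, 0.2], [0.3], [0.4, 0.5, 0.6]])
print(fused_label, len(fused_vector))
```

Decision fusion only needs the final labels of each model, whereas feature concatenation requires access to intermediate features and a classifier trained on the fused vector.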

According to a model provided by Mishra et al. [3], COVID-19 can be distinguished from other lung-related disorders. COVID-19 pneumonic, non-COVID-19 pneumonic, having pneumonia, and normal lungs are the four groups that were used. The model has two stages. The first stage divides the images into two groups: normal people go into one category, and pneumonic patients into the other. In the second stage, pneumonia is further divided into COVID and non-COVID patients. The CovAI-Net version of the 2D CNN is used to categorise potential COVID-19 patients using their chest X-ray images. On X-ray volumes, the authors report a stage-one classification accuracy of 96.5% and a stage-two classification accuracy of 98.31%.

Asif et al. [4] describe a method that uses chest X-ray images to identify COVID-19 pneumonia patients and improves detection accuracy by using deep convolutional neural networks. Transfer learning is used with the DCNN-based model Inception V3 to identify coronavirus and pneumonia from chest X-ray images. Transfer learning is a technique in which a model that has already been trained to solve one problem is reused to solve another. The model achieves an accuracy of 98% (97% during training and 93% during validation).

With a focus on lung imaging, Siddiqui et al. [5] provided a method for detecting COVID-19 using a number of bioindicators. Different biomarkers are available for COVID-19 diagnosis, and lung images are easily obtained through medical diagnostic techniques such as CT scans and ultrasound. Four bioindicators are mainly considered, in this order: RNA, the virus or antigen, the IgG antibody, and the lungs. The model provided a sensitivity of 100% when using X-ray images, a sensitivity of 90% when using CT scans, and a total accuracy of 94%.

A method for creating synthetic chest X-ray images of both COVID-positive and COVID-negative patients was proposed by Mann et al. [6]. They also noted that a GAN (Generative Adversarial Network) model can be used to create the images. Before an image is delivered to the GAN model, it must be preprocessed, for example by adjusting its size and brightness. Images are resized to 224 × 224 pixels, and their pixel values are normalized from [0, 255] to [-1, 1] via the PyTorch vision package. The GAN model consists of two main networks, the Generator and the Discriminator. The generator receives a noise vector of size 100 × 1 and outputs a single image.
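The pixel normalization and the generator input described above can be sketched in plain Python. This is an illustrative sketch, not the code of Mann et al.: the linear mapping `value / 127.5 - 1` is one common way to map [0, 255] onto [-1, 1], sampling the noise from a standard normal distribution is an assumption, and resizing is omitted.

```python
import random

NOISE_DIM = 100  # length of the generator's noise vector, as stated in the text


def scale_pixel(value):
    """Map an 8-bit intensity in [0, 255] linearly onto [-1, 1]."""
    if not 0 <= value <= 255:
        raise ValueError("expected an 8-bit pixel value")
    return value / 127.5 - 1.0


def scale_image(image):
    """Apply the pixel scaling to every entry of a 2-D list of intensities."""
    return [[scale_pixel(pixel) for pixel in row] for row in image]


def sample_noise(rng):
    """Draw one noise vector (assumption: standard normal entries)."""
    return [rng.gauss(0.0, 1.0) for _ in range(NOISE_DIM)]


tiny_image = [[0, 255], [51, 204]]  # hypothetical 2 x 2 grayscale patch
scaled = scale_image(tiny_image)
noise = sample_noise(random.Random(0))
print(scaled[0], len(noise))
```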

A model named COVID-GATNet was presented by Li et al. [7] to aid in automatically diagnosing CXR images and speeding up detection. Three kinds of chest X-ray scans, from healthy subjects, pneumonia patients, and COVID-19 patients, are used in this investigation. Geometric transformation operations are used to enlarge the original data. The COVID-GATNet model is created by integrating the DenseNet Convolutional Neural Network (DenseNet) and the Graph Attention Network (GAT). This model uses the attention mechanism to process data and the links between layers. The confusion matrix is used to measure the model's accuracy, and further calculations for predicting positive cases have been made using the three kinds of chest X-ray images.

A new model that incorporates StackNet meta-modeling, CNN, and ensemble learning was proposed by Ridouani et al. [8]. The model is referred to as CovStacknet. All of these models support the extraction of features from X-ray images. Patients with COVID-19 frequently show a pattern known as ground glass opacities (GGO), and the authors considered using X-ray images for diagnostics based on this observation. The goal of employing StackNet is to create a model whose accuracy is improved and whose training errors are decreased. The VGG16 model is used to extract features that are hidden in the original images. After two models are trained, the first is used to differentiate between normal images and pneumonia infection.

A convolutional neural network model with three datasets (normal, COVID-19, and viral pneumonia) was proposed by Ali Narin [9]. A CNN contains several kinds of layers, including fully connected dense layers and pooling layers. Convolution and pooling layers extract feature maps from images, and the classification is carried out by fully connected layers at the end of the network. A 5-fold cross-validation procedure has been employed to provide a more accurate and reliable result. Using MATLAB 2020, the data is preprocessed before being supplied to the CNN model and SVM. The SVM model categorizes the input data and generates output based on that. This model is more reliable because it consistently produces excellent outcomes.
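The 5-fold cross-validation procedure mentioned above can be sketched as follows. This is a generic illustration rather than the cited author's MATLAB code; the helper name `k_fold_indices` is hypothetical, and the samples are assumed to be already shuffled.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train_indices, validation_indices) for each of the k folds.

    Every sample is used for validation exactly once; fold sizes differ by
    at most one when n_samples is not divisible by k.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, validation
        start += size


folds = list(k_fold_indices(12, k=5))
for train, validation in folds:
    print(len(train), validation)
```

The reported score is then usually the average of the metric over the five validation folds.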

The best working model was suggested by Liang et al. [10]. Since only a very small amount of data is accessible, synthetic images must be created in order to train models and improve results. Non-COVID-19 chest X-ray images are translated into COVID-19 chest X-ray images using the cGAN model. A U-Net-based architecture can also assist the generation of synthetic COVID-19 chest X-ray images from standard chest X-ray images. The major goal of the paper is to translate images in order to expand the currently available dataset and boost the model's accuracy. A model accuracy of about 97.8% was achieved.

Our review of the literature indicated a paucity of research publications on unsupervised learning methods for COVID-19 identification using CT and CXR scan pictures, as was mentioned in the literature section. The suggested study used unsupervised clustering to locate COVID-19 in CXR images. The unlabeled data is clustered, and the best features are then extracted using a self-organizing feature map network. A self-organizing feature map is an iterative approach that performs best with a good initial approximation. A small dataset was also employed to show how well the self-organizing feature map worked. Due to the paucity of papers relevant to approaches based on unsupervised learning and the lack of research in this area, we have trained numerous unsupervised learning models and examined their performance.

The main motive of this work is to estimate prognosis and mortality that are equally crucial for COVID-19 as the diagnosis. When COVID-19 incidence peaked in January 2022, there was a global medical capacity crisis that led to an increase in mortality [11, 12]. Deep Learning algorithms for COVID-19 patient prognosis or death prediction will make it possible to allocate scarce medical resources effectively. Additionally, during therapy, helpful information might be given to the medical staff.

Here are the main contributions of this paper:

- For the purpose of detecting COVID-19 from chest X-ray pictures, we created a dataset of 6432 CXR images with binary labels. This dataset can be used by the scientific community as a benchmark. A board-certified radiologist labels the COVID-19 class images, and only those with a clear sign are used for testing purposes.

- On this dataset, we developed promising deep learning models, and we tested them against a test set of 965 CXR images to assess how well they performed. The sensitivity rate for our top-performing model was 98%, and its specificity was 94%.

- In terms of sensitivity, specificity, ROC curve, area under the curve, and precision-recall curve, we presented a thorough experimental examination of how well these models performed.

- Using a deep visualization technique, we created heatmaps depicting the areas that are most likely to be infected with COVID-19.

3. MATERIALS AND METHODOLOGY

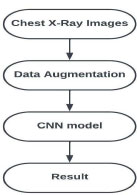

After reviewing the aforementioned literature and considering the various methodologies, we concluded that machine learning and image processing approaches are the most appropriate for creating a COVID-19 diagnosis model. The overall flow chart for this model is shown in Fig. (1). A dataset of chest X-ray (CXR) images is gathered from the Kaggle repository for the purpose of diagnosis.

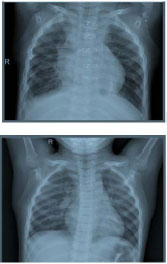

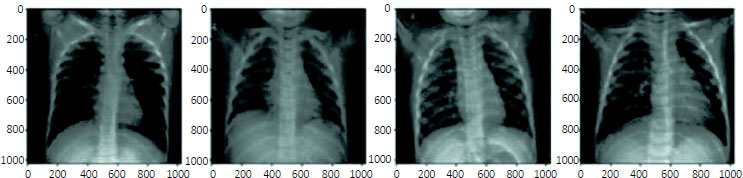

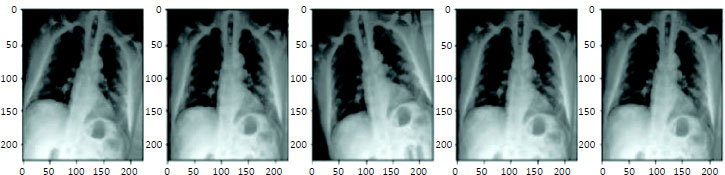

The dataset comprises X-ray scans of four classes, namely COVID-19, pneumonia, non-pneumonia, and normal X-ray images. It consists of a total of 6432 CXR images and is divided into two sets, a training set and a validation set: 5467 CXR images are used for training and 965 for validation. In the training set, 1345 are normal samples, 490 are COVID cases, and 3632 are pneumonia CXR scans. Similarly, the validation set contains 238 normal samples, 86 COVID cases, and 641 pneumonia CXR scans. Table 1 shows the dataset considered for training and validation. The dataset is not intended to establish the diagnostic capability of any deep learning model, but to investigate possible ways of efficiently predicting coronavirus infections using deep learning techniques. Figs. (2-5) depict images of COVID-19 and non-COVID-19 samples and their respective augmentation results.

| Classes | Training | Validation |
|---|---|---|
| Healthy Person | 1345 | 238 |
| Coronavirus Infected CXR | 490 | 86 |
| Pneumonia Infected CXR | 3632 | 641 |
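As a quick consistency check of the split reported in Table 1, the per-class counts can be summed and the validation share and class proportions computed. The dictionary keys are shorthand introduced here; the counts are taken directly from the table.

```python
# Per-class image counts from Table 1.
TRAIN = {"healthy": 1345, "covid": 490, "pneumonia": 3632}
VALID = {"healthy": 238, "covid": 86, "pneumonia": 641}

train_total = sum(TRAIN.values())
valid_total = sum(VALID.values())
total = train_total + valid_total

# Fraction of all images held out for validation.
valid_fraction = valid_total / total

# Share of each class in the whole dataset, which shows the class imbalance.
class_share = {name: (TRAIN[name] + VALID[name]) / total for name in TRAIN}

print(train_total, valid_total, total)
print(f"validation fraction: {valid_fraction:.3f}")
for name, share in class_share.items():
    print(f"{name}: {share:.3f}")
```

The totals match the 5467 training and 965 validation images stated in the text, which corresponds to a split of roughly 85% to 15%. The COVID-19 class is the smallest, so accuracy alone can be misleading and per-class metrics are worth reporting.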

Prior to use, the images undergo pre-processing: they are flipped, resized, zoomed, and otherwise transformed. By slightly altering existing images or producing new synthetic ones, this data augmentation method expands the available dataset. The images are then passed through the layers of a convolutional model. A 5-layer convolutional neural network model is built. Both the CNN1 and CNN2 models use the same 5 layers, which are described below.

- Layer 1 (Convolution Layer): This layer applies a 3x3 filter to the image, which helps in extracting useful features. It performs element-wise multiplication and sums the computed values. These sums are stored in a new matrix that is used for further processing. The ReLU function is used as the activation of this layer; it passes positive values unchanged and sets negative values to zero.

- Layer 2 (Pooling Layer): The pooling layer reduces the size of the feature maps. This model uses a max pooling layer, in which only the maximum value of each 2x2 window is stored in the new matrix used for further processing. Layers 1 and 2 are applied twice to get more accurate results.

- Layer 3 (Flatten Layer): The output of the max pooling layer is multi-dimensional, but the dense layers take only one-dimensional input. The flatten layer converts the multi-dimensional matrix into a one-dimensional vector.

- Layer 4 (Dense Layer 1): Each neuron in a dense layer takes input from the outputs of all neurons in the previous layer. This helps to classify images based on the output of the convolution layers. 128 neurons are used, with the ReLU function as the activation.

- Layer 5 (Dense Layer 2): Two neurons are used for the final classification. The sigmoid activation function is used because it is well suited to binary classification.

CNN1 classifies images as COVID-positive or COVID-negative. The CNN2 model classifies images as pneumonia or non-pneumonia.
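To make the convolution, max pooling, and flatten steps concrete, the following pure-Python sketch runs them on a tiny single-channel image. It is an illustration under simplifying assumptions (one channel, one filter, stride 1, no padding, a fixed all-ones kernel), not the trained model; the dense layers and the learning of filter weights are omitted.

```python
def relu(x):
    """ReLU activation: keep positive values, replace negatives with zero."""
    return x if x > 0 else 0.0


def conv2d_valid(image, kernel):
    """Slide a square kernel over the image (stride 1, no padding),
    sum the element-wise products, and apply ReLU to each sum."""
    k = len(kernel)
    rows, cols = len(image), len(image[0])
    output = []
    for i in range(rows - k + 1):
        out_row = []
        for j in range(cols - k + 1):
            total = sum(
                image[i + a][j + b] * kernel[a][b]
                for a in range(k)
                for b in range(k)
            )
            out_row.append(relu(total))
        output.append(out_row)
    return output


def max_pool(feature_map, size=2):
    """Keep the maximum of each non-overlapping size x size window."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(
                feature_map[i * size + a][j * size + b]
                for a in range(size)
                for b in range(size)
            )
            for j in range(cols)
        ]
        for i in range(rows)
    ]


def flatten(feature_map):
    """Turn a 2-D feature map into a 1-D list for the dense layers."""
    return [value for row in feature_map for value in row]


# Hypothetical 10 x 10 input and 3 x 3 kernel, both filled with ones.
image = [[1.0] * 10 for _ in range(10)]
kernel = [[1.0] * 3 for _ in range(3)]

# Convolution and pooling are applied twice, as in the description above.
stage1 = max_pool(conv2d_valid(image, kernel))   # 10x10 -> 8x8 -> 4x4
stage2 = max_pool(conv2d_valid(stage1, kernel))  # 4x4 -> 2x2 -> 1x1
features = flatten(stage2)
print(len(stage1), len(stage2), features)
```

In a real model each convolution layer holds many learned filters and the flattened vector is much longer; the sketch only shows how the spatial size shrinks at each step.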

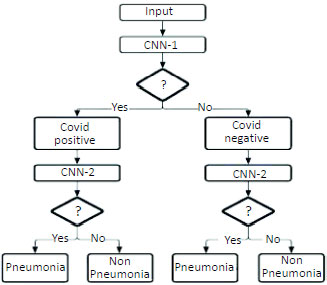

Once the model has been trained on the training data using these layers, it is tested using the validation set. The distinction between COVID-positive and COVID-negative is made first. Based on that result, the images are passed to the CNN2 model to differentiate between pneumonia and non-pneumonia. Fig. (6) depicts the two models. Whenever a patient's X-ray image needs to be tested, it is supplied to the trained model described above, which diagnoses the image based on previously acquired knowledge. Because two CNN models are used, two different accuracy values are obtained.
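The two-stage flow can be sketched as a small function that applies the two binary classifiers in order. This is a hypothetical sketch: the scorer functions stand in for the trained CNN1 and CNN2, the 0.5 decision threshold is an assumption, and the sketch assumes that every image is passed through both stages.

```python
def two_stage_diagnosis(image, cnn1_score, cnn2_score, threshold=0.5):
    """Apply CNN1 (COVID vs. non-COVID) first, then CNN2 (pneumonia vs. not).

    cnn1_score and cnn2_score are callables returning a score in [0, 1];
    scores at or above the threshold are treated as the positive class.
    """
    covid_positive = cnn1_score(image) >= threshold
    pneumonic = cnn2_score(image) >= threshold
    return {
        "covid": "positive" if covid_positive else "negative",
        "pneumonia": "pneumonic" if pneumonic else "non-pneumonic",
    }


# Stand-in scorers with fixed outputs, used only to exercise the control flow.
def fake_cnn1(image):
    return 0.91


def fake_cnn2(image):
    return 0.12


result = two_stage_diagnosis([[0.0]], fake_cnn1, fake_cnn2)
print(result)
```

Keeping the two decisions separate makes it straightforward to report one accuracy value per stage.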

4. RESULTS AND DISCUSSION

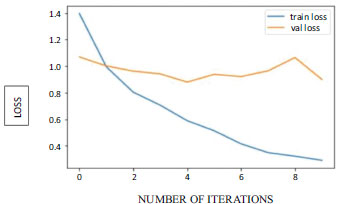

The cost (loss) function gives the difference between the obtained value and the expected value. The training curve shown in Fig. (7) is used to check whether the loss remains high. If the loss stays high and does not decrease as the number of iterations increases, the model is underfitting.

The training loss may come down over time, resulting in low error values.

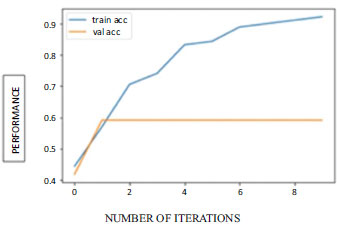

The model is overfitting if the validation loss decreases until a certain point, called the turning point, and then starts increasing. The accuracy graph is shown in Fig. (8).
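The turning point can be located programmatically from a list of per-epoch validation losses. The sketch below is illustrative: the loss values are made up, and the `patience` rule (no improvement for a given number of epochs after the minimum) is an assumed heuristic rather than part of the described method.

```python
def turning_point(val_losses):
    """Return the epoch index at which the validation loss is lowest."""
    if not val_losses:
        raise ValueError("need at least one loss value")
    return min(range(len(val_losses)), key=val_losses.__getitem__)


def is_overfitting(val_losses, patience=2):
    """Flag overfitting when the validation loss has not improved for
    at least `patience` epochs after its minimum."""
    return len(val_losses) - 1 - turning_point(val_losses) >= patience


# Hypothetical validation losses: falling, then rising after epoch 2.
losses = [0.90, 0.70, 0.50, 0.55, 0.60]
print(turning_point(losses), is_overfitting(losses))
```

Training is commonly stopped at the turning point, or the weights from that epoch are restored.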

4.1. Confusion Matrix

The confusion matrix describes the performance of the model in distinguishing between COVID-19 and normal cases and between pneumonic and non-pneumonic cases. It is an N x N matrix, where N is the number of target classes. The model is evaluated by comparing target values with model-predicted values.

Due to binary-valued outputs, we make use of a 2 x 2 matrix.

True Positive (TP): The predicted value matches the actual value, and both are positive.

True Negative (TN): The predicted value matches the actual value, and both are negative.

False Positive (FP): A false prediction. The actual value is negative but is predicted as positive.

False Negative (FN): A false prediction. The actual value is positive but is predicted as negative.
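The four cells of a 2 x 2 confusion matrix can be counted directly from paired lists of actual and predicted labels. The sketch below uses the standard definitions; the example labels are hypothetical and do not come from the dataset.

```python
def confusion_counts(actual, predicted, positive=1):
    """Count TP, TN, FP and FN for a binary classification task."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for truth, guess in zip(actual, predicted):
        if guess == positive:
            # Predicted positive: correct if the truth is positive too.
            counts["TP" if truth == positive else "FP"] += 1
        else:
            # Predicted negative: a miss if the truth is actually positive.
            counts["FN" if truth == positive else "TN"] += 1
    return counts


# Hypothetical labels: 1 = positive case, 0 = negative case.
actual = [1, 1, 0, 0, 1]
predicted = [1, 0, 0, 1, 1]
counts = confusion_counts(actual, predicted)
print(counts)
```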

CNN model 1 classifies images as COVID-positive or COVID-negative. This model gives a TP of 70 and a TN of 45 out of 115 images, as shown in Table 2, which indicates that the model classifies these images with 100% accuracy.

| - | COVID -ve | COVID +ve |
|---|---|---|
| COVID -ve | 70 | 45 |
| COVID +ve | 0 | 0 |

CNN model 2 classifies images as pneumonic or non-pneumonic. This model gives a TP of 40 and a TN of 24 out of 64 images, as shown in Table 3, which indicates that the model classifies these images with 100% accuracy.

| - | Non-Pneumonic | Pneumonic |
|---|---|---|
| Non-Pneumonic | 40 | 24 |
| Pneumonic | 0 | 0 |

5. CLASSIFICATION REPORT

5.1. Precision

Precision helps in assessing the reliability of the model's positive predictions. It is defined as the ratio of true positives (correctly classified positive samples) to the total number of samples classified as positive.

5.2. Recall

The recall is calculated as the proportion of accurately recognized positive samples to all positive samples. Recall measures the model's capacity to identify positive samples. The recall increases as more positive samples are found. If the model correctly identifies all positive samples as positive, the recall will be 1.

| - | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| | 1.00 | 0.92 | 0.94 | 115 |
| Accuracy | | | 0.98 | 115 |
| Macro Avg | 0.5 | 0.46 | 0.37 | 115 |
| Weighted Avg | 1.00 | 0.92 | 0.94 | 115 |

5.3. F1-score

Overall model performance can be summarized using the F1 score, which combines precision and recall into a single value: their harmonic mean. A good F1 score means the model has few false negatives and few false positives. A value of 1 indicates a perfect model, and a value of 0 indicates that the model has failed.
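Precision, recall, and the F1 score can all be computed from the confusion-matrix counts. The sketch below uses the standard formulas; the counts in the example are hypothetical and are not taken from the tables in this paper.

```python
def precision(tp, fp):
    """Share of predicted positives that are truly positive: TP / (TP + FP)."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Share of actual positives that were found: TP / (TP + FN)."""
    return tp / (tp + fn) if tp + fn else 0.0


def f1_score(p, r):
    """Harmonic mean of precision and recall: 2 * P * R / (P + R)."""
    return 2 * p * r / (p + r) if p + r else 0.0


# Hypothetical counts: 8 true positives, 2 false positives, 2 false negatives.
p = precision(tp=8, fp=2)
r = recall(tp=8, fn=2)
f1 = f1_score(p, r)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

Because the harmonic mean is pulled toward the smaller of its two inputs, a model cannot reach a high F1 score with high precision alone or high recall alone.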

With a precision of 1.0, a recall of 0.92, and an F1 score of 0.94, the classification accuracy for COVID-19 cases reaches 0.98, as shown in Table 4. Similarly, with a precision of 1.0, a recall of 0.91, and an F1 score of 0.93, the classification accuracy for pneumonic cases reaches 0.94, as shown in Table 5. The CNN model, which analyses image regions that could not be satisfactorily explained with structured data alone, appeared to show improved performance.

| - | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| | 1.00 | 0.91 | 0.93 | 64 |
| Accuracy | | | 0.94 | 64 |
| Macro Avg | 0.5 | 0.455 | 0.465 | 64 |
| Weighted Avg | 1.00 | 0.91 | 0.93 | 64 |

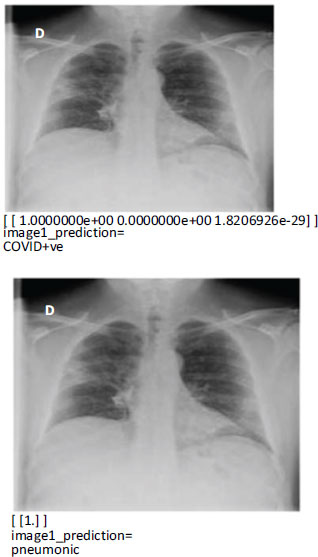

5.4. Model Prediction

Our study is significant because we used only the first chest X-ray data of COVID-19 participants to create a model that predicts early mortality. The predicted images for COVID +ve and pneumonic diseases are shown in Fig. (9). Early forecasting of patients' clinical outcomes is beneficial for both bed management and treatment. Furthermore, for individuals with severe COVID-19 who are difficult to transfer for advanced testing such as computed tomography, chest X-rays and laboratory tests are easily accessible. Using only the routinely acquired chest X-ray data from patients with severe COVID-19, we created a deep learning model. We verified the performance improvement of the ensemble model made possible by combining deep learning models that use data from multiple sources, such as chest X-rays and electronic health records.

| Reference | Year | Technique | Accuracy |
|---|---|---|---|
| COVID-GATNet: A Deep Learning Framework for Screening of COVID-19 from Chest X-Ray Images | 2020 | Densenet Convolutional Neural Network (DenseNet) and Graph Attention Network (GAT), COVID-GATNet | 91.9% |
| Densenet Convolutional Neural Network (DenseNet) and Graph Attention Network (GAT), COVID-GATNet | 2022 | CovStacknet | 92.7% |
| Classification of COVID-19 from Chest X-ray images using Deep Convolutional Neural Network | 2020 | DCNN | 97% |
| Screening of COVID-19 Using Chest X-Ray Image (Proposed) | 2022 | CNN(VGG5) for COVID-19 cases | 98.12% |
| | | CNN(VGG5) for Pneumonic cases | 94% |

Table 6 compares the performance of the suggested method with that of the other three well-known unsupervised methods. A strategy based on self-organizing maps is suggested as one of three strategies for COVID-19 detection. In the original studies, many of these approaches are examined on MNIST and other common datasets. In our work, we used CT and X-ray datasets to develop those algorithms and assess how well they performed at COVID-19 detection. The proposed method outperformed the four currently used unsupervised algorithms on both the CT and X-ray datasets.

CONCLUSION

The literature review provided a good understanding of several models that aid in COVID-19 identification and their corresponding accuracy. We learned about several methods for augmenting data, the usage of bioindicators, Inception-v3 models, and CNN models. COVID-19 detection using ML-based techniques is one of the quickest approaches. Providing doctors with immediate, accurate results that enable prompt treatment will contribute to saving patients' lives. Better public health is ensured in the future by increased surveillance and appropriate research on the infections. Early detection facilitates simple tracking and treatment because prevention is always preferable to cure. If AI approaches are used well, test costs will drop significantly and the benefits of the techniques will be realized even if mutations do occur.

LIST OF ABBREVIATIONS

| Abbreviation | Definition |
|---|---|
| CT | Computed Tomography |
| CXR | Chest X-ray |
| RT-PCR | Reverse Transcription-polymerase Chain Reaction |
| ARDS | Acute Respiratory Distress Syndrome |

ETHICS APPROVAL AND CONSENT TO PARTICIPATE

Not applicable.

HUMAN AND ANIMAL RIGHTS

Not applicable.

CONSENT FOR PUBLICATION

Not applicable.

AVAILABILITY OF DATA AND MATERIAL

Not applicable.

FUNDING

None.

CONFLICT OF INTEREST

Dr. Vinayakumar Ravi is an associate editorial board member of the journal The Open Bioinformatics Journal.

ACKNOWLEDGEMENTS

Declared none.